Perseverance Imagery, technical discussion of processing, cameras, etc.

Feb 22 2021, 02:26 AM

#1

Member Group: Members Posts: 219 Joined: 14-November 11 From: Washington, DC Member No.: 6237

Putting this here for reference: the payload of the JSON feed link for Perseverance raws (see source in the other thread here).

https://mars.nasa.gov/rss/api/?feed=raw_ima...;&extended=

Looks like there's a ton of good data in addition to just the (PNG! Bayer color separated!) images. This is for the first image shown on the page at the moment.

CODE
"images": [
  {
    "extended": {
      "mastAz":"UNK",
      "mastEl":"UNK",
      "sclk":"667129493.453",
      "scaleFactor":"4",
      "xyz":"(0.0,0.0,0.0)",
      "subframeRect":"(1,1,1280,960)",
      "dimension":"(1280,960)"
    },
    "sol":2,
    "attitude":"(0.415617,-0.00408664,-0.00947025,0.909481)",
    "image_files": {
      "medium":"https://mars.nasa.gov/mars2020-raw-images/pub/ods/surface/sol/00002/ids/edr/browse/rcam/RRB_0002_0667129492_604ECM_N0010052AUT_04096_00_2I3J01_800.jpg",
      "small":"https://mars.nasa.gov/mars2020-raw-images/pub/ods/surface/sol/00002/ids/edr/browse/rcam/RRB_0002_0667129492_604ECM_N0010052AUT_04096_00_2I3J01_320.jpg",
      "full_res":"https://mars.nasa.gov/mars2020-raw-images/pub/ods/surface/sol/00002/ids/edr/browse/rcam/RRB_0002_0667129492_604ECM_N0010052AUT_04096_00_2I3J01.png",
      "large":"https://mars.nasa.gov/mars2020-raw-images/pub/ods/surface/sol/00002/ids/edr/browse/rcam/RRB_0002_0667129492_604ECM_N0010052AUT_04096_00_2I3J01_1200.jpg"
    },
    "imageid":"RRB_0002_0667129492_604ECM_N0010052AUT_04096_00_2I3J01",
    "camera": {
      "filter_name":"UNK",
      "camera_vector":"(-0.7838279435884001,0.600143487448691,0.15950407306054173)",
      "camera_model_component_list":"2.0;0.0;(46.176,2.97867,720.521);(-0.701049,0.00940617,0.713051);(8.39e-06,0.0168764,-0.00743155);(-0.00878744,-0.00869157,-0.00676256);(-1.05782,-0.466472,-0.724517);(-0.702572,0.0113481,0.711523);(-448.981,-528.002,453.359)",
      "camera_position":"(-1.05782,-0.466472,-0.724517)",
      "instrument":"REAR_HAZCAM_RIGHT",
      "camera_model_type":"CAHVORE"
    },
    "caption":"NASA's Mars Perseverance rover acquired this image of the area in back of it using its onboard Rear Right Hazard Avoidance Camera. \n\n This image was acquired on Feb. 21, 2021 (Sol 2) at the local mean solar time of 15:37:11.",
    "sample_type":"Full",
    "date_taken_mars":"Sol-00002M15:37:11.985",
    "credit":"NASA/JPL-Caltech",
    "date_taken_utc":"2021-02-21T02:16:26Z",
    "json_link":"https://mars.nasa.gov/rss/api/?feed=raw_images&category=mars2020&feedtype=json&id=RRB_0002_0667129492_604ECM_N0010052AUT_04096_00_2I3J01",
    "link":"https://mars.nasa.gov/mars2020/multimedia/raw-images/?id=RRB_0002_0667129492_604ECM_N0010052AUT_04096_00_2I3J01",
    "drive":"52",
    "title":"Mars Perseverance Sol 2: Rear Right Hazard Avoidance Camera (Hazcam)",
    "site":1,
    "date_received":"2021-02-21T23:12:58Z"
  },

Here's hoping that one of you skilled characters can make good use of this...
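Most of the numeric fields in these records are stored as strings such as "(0.415617,-0.00408664,-0.00947025,0.909481)", so they need a little parsing before use. A minimal sketch using only the Python standard library; `parse_tuple`, `parse_number` and `summarize_record` are hypothetical helper names, and the sample is a trimmed copy of the record above wrapped in an object with an "images" list:

```python
import json

def parse_tuple(text):
    """Turn a string like "(1280,960)" into a tuple of floats; None if not a tuple."""
    text = text.strip()
    if not (text.startswith("(") and text.endswith(")")):
        return None
    return tuple(float(part) for part in text[1:-1].split(","))

def parse_number(text):
    """Turn a numeric string into a float; placeholders such as "UNK" become None."""
    try:
        return float(text)
    except ValueError:
        return None

def summarize_record(record):
    """Collect the pointing-related fields of one entry in the "images" list."""
    camera = record["camera"]
    extended = record["extended"]
    return {
        "imageid": record["imageid"],
        "sol": record["sol"],
        "instrument": camera["instrument"],
        "model_type": camera["camera_model_type"],
        "attitude": parse_tuple(record["attitude"]),
        "camera_vector": parse_tuple(camera["camera_vector"]),
        "camera_position": parse_tuple(camera["camera_position"]),
        "mast_az": parse_number(extended["mastAz"]),
        "mast_el": parse_number(extended["mastEl"]),
        "dimension": parse_tuple(extended["dimension"]),
    }

# Trimmed copy of the Sol 2 record quoted above.
SAMPLE = """{"images": [{
  "extended": {"mastAz": "UNK", "mastEl": "UNK", "dimension": "(1280,960)"},
  "sol": 2,
  "attitude": "(0.415617,-0.00408664,-0.00947025,0.909481)",
  "imageid": "RRB_0002_0667129492_604ECM_N0010052AUT_04096_00_2I3J01",
  "camera": {
    "instrument": "REAR_HAZCAM_RIGHT",
    "camera_model_type": "CAHVORE",
    "camera_vector": "(-0.7838279435884001,0.600143487448691,0.15950407306054173)",
    "camera_position": "(-1.05782,-0.466472,-0.724517)"
  }
}]}"""

summary = summarize_record(json.loads(SAMPLE)["images"][0])
print(summary["instrument"], summary["attitude"], summary["mast_az"])
```

For a live record, `json.load(urllib.request.urlopen(url))` on a `json_link` URL should return parsed JSON, but the top-level layout of single-record responses may differ from the full feed, so check the keys before indexing.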

Feb 23 2021, 12:17 PM

#2

Junior Member Group: Members Posts: 95 Joined: 11-January 07 From: Amsterdam Member No.: 1584

A small Perseverance update for Marslife: implementing the camera pointing data is proving a bit troublesome.

Its accuracy is hit-and-miss.

Feb 23 2021, 02:40 PM

#3

Member Group: Members Posts: 219 Joined: 14-November 11 From: Washington, DC Member No.: 6237

So cool!

Maybe they're still refining which way is up... There are lots of local coordinate frames to sort out, and they probably need some time (plus the sun shots it looks like they just took, some 3D processing of the local area, and some radio ranging) to get absolute position and orientation info. The instrument position details will certainly differ from MSL. And in my skim of the data, it looked to me like they re-jiggered some of the pointing data formats; did you see that too? Not sure which parts you're using.

Feb 23 2021, 08:39 PM

#4

Founder Group: Chairman Posts: 14432 Joined: 8-February 04 Member No.: 1

For the less technically minded among us: Ryan Kinnet has put up a page that grabs a listing of links to the PNG files, which you can then feed to any browser batch-download plugin.

https://twitter.com/rover_18/status/1364309922167488512

I tried Firefox with 'DownThemAll' and it worked perfectly. Meanwhile, THIS GUY has Python code to grab the data as well: https://twitter.com/kevinmgill/status/1364311336000258048

Feb 23 2021, 08:45 PM

#5

Senior Member Group: Members Posts: 3648 Joined: 1-October 05 From: Croatia Member No.: 523

Speaking of raw images, are these weird colorations due to something wrong with the pipeline or the cameras themselves (I'm thinking and hoping it's the former)?

Examples attached: one is a NavCam-L frame from yesterday (it seems to have been pulled since), the other an RDC frame.

Feb 23 2021, 08:56 PM

#6

Senior Member Group: Members Posts: 2517 Joined: 13-September 05 Member No.: 497

QUOTE Speaking of raw images, are these weird colorations due to something wrong with the pipeline...

Yes.

-------------------- Disclaimer: This post is based on public information only. Any opinions are my own.

Feb 23 2021, 09:01 PM

#7

Senior Member Group: Members Posts: 3648 Joined: 1-October 05 From: Croatia Member No.: 523

QUOTE Yes.

That's reassuring to hear. One could sort of expect this from the (well, almost) off-the-shelf commercial EDL cameras, but the navcams are on a whole other level. New raw images are just trickling in. Yep, looks like they've started downloading the full-quality, Bayered RDC camera views. On a side note, I found it interesting how different the color balance was between RDC and DDC. Yes, different detector resolutions, but same vendor.

Feb 23 2021, 09:35 PM

#8

Junior Member Group: Members Posts: 95 Joined: 11-January 07 From: Amsterdam Member No.: 1584

Yes, I hope this erroneous positioning will improve once the rover is properly calibrated. Otherwise my code is to blame...

I use the rover attitude, mastAz and mastEl values for camera pointing; I don't know if that has changed much since Curiosity.

Feb 24 2021, 01:30 AM

#9

Merciless Robot Group: Admin Posts: 8784 Joined: 8-December 05 From: Los Angeles Member No.: 602

Hey, all. This thread is for the image wizards among us and will focus on the abundance of data Mars 2020 will provide. Please post your products, methods, and tips, and use this thread to share information and likewise learn from others.

-------------------- A few will take this knowledge and use this power of a dream realized as a force for change, an impetus for further discovery to make less ancient dreams real.

Feb 24 2021, 05:01 AM

#10

Newbie Group: Members Posts: 6 Joined: 6-August 12 Member No.: 6475

Okay, I hope this is the right forum for this question. One of the things I really like about both rovers being able to take stereo imagery is being able to see things in 3D. Is there a utility out there that lets us view images in 3D using a VR headset? I've briefly searched around but haven't seen much. Anaglyphs are cool and everything, but they leave a bit to be desired.

Feb 24 2021, 01:44 PM

#11

Junior Member Group: Members Posts: 36 Joined: 28-May 08 Member No.: 4152

QUOTE Is there a utility out there that lets us view images in 3D using a VR headset? I've briefly searched around but haven't seen much. Anaglyphs are cool and everything but it leaves a bit to be desired.

Stereo imagery viewed in a VR headset is a bit underwhelming; reconstructing geometry using photogrammetry to create a fully 3D representation of a landscape is much more interesting. I did this with some Curiosity imagery a few years ago, with fascinating results. If you have a SteamVR-capable VR headset, you can have a look here: https://steamcommunity.com/sharedfiles/file...s/?id=928142301 - I typed up some fairly detailed notes in the description which will broadly apply to Perseverance imagery. (Full disclosure: I work for Valve, creators of SteamVR. The Mars stuff was a fun personal project which turned into something a bit larger...)

Getting right back on topic for this thread, some notes on photogrammetry involving Perseverance imagery! Some decent camera parameters* to start with in Agisoft Metashape (formerly PhotoScan):

Navcam
- Camera type: Fisheye
- Pixel size (mm): 0.0255 x 0.0255 (for 1280x960 images)
- Focal length (mm): 19.1

Hazcam
- Camera type: Fisheye
- Pixel size (mm): 0.0255 x 0.0255 (for 1280x960 images)
- Focal length (mm): 14

Using separate calibration profiles for the left and right cameras may make sense; that worked better for Curiosity's navcams when I did this. (They're beautifully hand-made one-off scientific instruments, after all.) Metashape will further refine the camera parameters once given these reasonable starting points.

It's all looking like really exciting data to play around with: navcam imagery in high resolution and full colour after a bit of processing. I'm starting to figure out the debayering side, and I'm sure this thread will come in very useful!

* Derived from "The Mars 2020 Engineering Cameras and Microphone on the Perseverance Rover: A Next-Generation Imaging System for Mars Exploration", Table 2, "Perseverance Navcam, Hazcam, and Cachecam characteristics".

Feb 24 2021, 04:22 PM

#12

Newbie Group: Members Posts: 6 Joined: 6-August 12 Member No.: 6475

QUOTE Stereo imagery viewed in a VR headset is a bit underwhelming - reconstructing geometry using photogrammetry to create a fully 3D representation of a landscape is much more interesting. ... If you have a SteamVR capable VR headset, you can have a look here: https://steamcommunity.com/sharedfiles/file...s/?id=928142301

Great! Thanks for the answer. I have an Index, so I'll check that out!

Edit: Just tried it, and it's awesome! It gives a great sense of the rover's scale, and I liked all the points of interest. I'm surprised there isn't more stuff like this out there; it would be a fantastic educational tool, and it's just fun to stand or sit around in for a while.

Feb 24 2021, 11:41 PM

#13

Senior Member Group: Members Posts: 1639 Joined: 5-March 05 From: Boulder, CO Member No.: 184

QUOTE There are what i assume to be quaternions (?) regarding attitude in the search/query api. see https://mars.nasa.gov/rss/api/?feed=raw_ima...04096_034085J01 for example. There are altazimuth compass bearings and elevation for the regular people as well, which could be used to project the images to a compass ball according to the metadata, rather than manually overlapping and tie-pointing the images. I do not know of software or libraries that can help with this, if you do please tell.

Regarding the API "quaternion" coordinate info with the raw images, the Linux program 'jq' is good for manipulating these. While this is just a starting point, it would be interesting to see how an automated program might work at putting together a mosaic. I would just need some time to hook up some of my Fortran code with some scripts.

CargoCult, can your SteamVR creation be viewed somehow on a regular computer? With Curiosity, sittingduck (YouTube link below) had some really nice videos moving through a 3D landscape that might be interesting to see in VR. Back in 2016, sittingduck used a Blender plug-in obtained from phase4: https://www.youtube.com/watch?v=7zW9yISB01Y...eature=youtu.be

-------------------- Steve [ my home page and planetary maps page ]

Feb 25 2021, 01:13 AM

#14

Senior Member Group: Members Posts: 4247 Joined: 17-January 05 Member No.: 152

About image pointing, the algorithm I use for MSL, based on this post, seems to work fine for Percy, with the "rover_attitude" quaternion field replaced with "attitude". So for the "shiny rock" image:

https://mars.nasa.gov/mars2020-raw-images/p...6_034085J01.png

I get elevation, azimuth = 1.2, 251.5 degrees, which looks about right. That is presumably for the centre of the FOV, which happens to coincide almost exactly with the shiny rock.
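The general recipe behind this kind of calculation is to rotate a pointing direction from the rover frame into a local-level frame with the attitude quaternion, then read off azimuth and elevation. Below is a minimal sketch of that recipe, not fredk's actual code, under loudly stated assumptions: the quaternion is scalar-first (w, x, y, z) and maps rover-frame vectors into a local north-east-down frame, and the rover frame is x forward, y right, z down as on MSL. Read that way, the Sol 2 attitude above is a rotation of roughly 131 degrees, almost entirely about the vertical axis, which at least looks like a plausible heading. Which JSON field supplies the rover-frame pointing vector is left open here; the helper names are hypothetical.

```python
import math

def quat_rotate(q, v):
    """Rotate 3-vector v by the unit quaternion q = (w, x, y, z).

    Assumption: scalar-first order, and q maps rover-frame vectors into the
    local-level (north, east, down) frame.
    """
    w, x, y, z = q
    vx, vy, vz = v
    # t = 2 * (q_vec x v)
    tx = 2.0 * (y * vz - z * vy)
    ty = 2.0 * (z * vx - x * vz)
    tz = 2.0 * (x * vy - y * vx)
    # v' = v + w * t + q_vec x t
    return (
        vx + w * tx + (y * tz - z * ty),
        vy + w * ty + (z * tx - x * tz),
        vz + w * tz + (x * ty - y * tx),
    )

def azimuth_elevation(v_ned):
    """Azimuth (deg clockwise from north) and elevation (deg above horizon)."""
    north, east, down = v_ned
    azimuth = math.degrees(math.atan2(east, north)) % 360.0
    elevation = math.degrees(math.atan2(-down, math.hypot(north, east)))
    return azimuth, elevation

def pointing(attitude, v_rover):
    """Azimuth/elevation of a rover-frame direction, given the rover attitude."""
    return azimuth_elevation(quat_rotate(attitude, v_rover))

# Sol 2 attitude from the record quoted earlier in the thread; the vector is
# the rover's +x (forward) axis under the assumed frame convention.
attitude = (0.415617, -0.00408664, -0.00947025, 0.909481)
print(pointing(attitude, (1.0, 0.0, 0.0)))
```

If the results look mirrored or rotated by a fixed amount, the quaternion order (scalar-last) or the frame convention is the first thing to check.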

Feb 25 2021, 02:04 AM

#15

Founder Group: Chairman Posts: 14432 Joined: 8-February 04 Member No.: 1

It's not great, but here are my Agisoft Metashape results with the Navcam images so far:

https://sketchfab.com/3d-models/m2020-landi...7215aa7db2fb0c8

Feb 25 2021, 06:28 PM

#16

Senior Member Group: Members Posts: 1639 Joined: 5-March 05 From: Boulder, CO Member No.: 184

QUOTE It's not great - but this is my agisoft metashape results with the Navcam images so far https://sketchfab.com/3d-models/m2020-landi...7215aa7db2fb0c8

Looks pretty nice given the available vantage points (one rover location). Is there a possibility the "1st Person" navigation mode would work with this model?

-------------------- Steve [ my home page and planetary maps page ]

Feb 25 2021, 08:11 PM

#17

Newbie Group: Members Posts: 13 Joined: 21-December 19 Member No.: 8729

QUOTE Stereo imagery viewed in a VR headset is a bit underwhelming - reconstructing geometry using photogrammetry to create a fully 3D representation of a landscape is much more interesting. I did this with some Curiosity imagery a few years ago, with fascinating results. ...

That's awesome! Great to see you here. I just ran into that two days ago. It is something I always wanted to do, but never found the time to work on for a longer stretch. I tried photogrammetry on InSight; this is the result from two years ago: https://www.youtube.com/watch?v=cBYAwTm_ArE...eature=youtu.be

Big thanks for the info here and on Steam! It will surely be very helpful to others too.

To stay on topic: I had a run with a short sequence of the true raw data from the down-look cams: https://www.youtube.com/watch?v=l4WKIoTjE4c...p;pbjreload=101

I will just wait for all the EDL data to download and then have a run with the data in Agisoft. I tried this with the MARDI cam; while it was able to register the images clearly, I must have done something wrong, as the last image ended up "under the ground". Anyway, the Perseverance data surely look promising in that respect.

I am working on a stabilized 360 video, as with the MARDI cam. This is a quick version of the underlying layer, simulating approximately the view from 10 km above the surface. I made it from the debayered data; it is probably impacted by the FFMPEG compression.

Feb 25 2021, 09:00 PM

#18

Junior Member Group: Members Posts: 95 Joined: 11-January 07 From: Amsterdam Member No.: 1584

QUOTE About image pointing, the algorithm I use for MSL, based on this post, seems to work fine for Percy, with the "rover_attitude" quaternion field replaced with "attitude".

Thank you for this hint, fredk. I didn't realize the SPICE toolkit works just as well without kernels. For future use, does anyone know how to obtain the zoom value from the JSON information? Should it be derived from the CAHVOR data?

Feb 25 2021, 09:56 PM

#19

Senior Member Group: Members Posts: 2517 Joined: 13-September 05 Member No.: 497

QUOTE For future use, does anyone know how to obtain the zoom value from the JSON information? Should it be derived from the CAHVOR data?

It should be, if the CAHVOR models are set correctly, which they may or may not be at this point. See https://github.com/bvnayak/CAHVOR_camera_model and https://agupubs.onlinelibrary.wiley.com/doi...29/2003JE002199

-------------------- Disclaimer: This post is based on public information only. Any opinions are my own.

Feb 25 2021, 11:03 PM

#20

Junior Member Group: Members Posts: 99 Joined: 17-September 07 Member No.: 3901

Do color calibration targets ever fade (change color) from solar (and worse) radiation on Mars?

How are they tested on Earth and shown not to fade?

Feb 25 2021, 11:14 PM

#21

Senior Member Group: Members Posts: 2517 Joined: 13-September 05 Member No.: 497

QUOTE Do color calibration targets ever fade (change color) from solar (and worse) radiation on Mars? How are they tested on Earth and proven to not fade?

https://mastcamz.asu.edu/mars-in-full-color/

QUOTE At the University of Winnipeg, the effect of intense Mars-like ultraviolet (UV) light on the colors of the eight materials was studied, confirming that the materials will only change very little with UV-exposure through a long mission at the Martian surface.

Typically, they get dusty before fading would be an issue. Hopefully these magnets work better than the last time.

-------------------- Disclaimer: This post is based on public information only. Any opinions are my own.

Feb 26 2021, 12:56 AM

#22

Member Group: Members Posts: 890 Joined: 18-November 08 Member No.: 4489

Just came across a paper on the cameras and mic:

"The Mars 2020 Engineering Cameras and Microphone on the Perseverance Rover: A Next-Generation Imaging System for Mars Exploration" https://link.springer.com/article/10.1007/s11214-020-00765-9

Feb 26 2021, 12:32 PM

#23

Junior Member Group: Members Posts: 95 Joined: 11-January 07 From: Amsterdam Member No.: 1584

QUOTE Should be if the CAHVOR model is set correctly, which they may or may not be at this point. See https://github.com/bvnayak/CAHVOR_camera_model and https://agupubs.onlinelibrary.wiley.com/doi...29/2003JE002199

Thanks for the links. I tried the code, but it gave very low and fluctuating values for the Mastcam-Z focal length. Will try again when new images arrive.

Feb 26 2021, 04:44 PM

#24

Senior Member Group: Members Posts: 2517 Joined: 13-September 05 Member No.: 497

QUOTE Thanks for the links. I tried the code but it gave very low values and fluctuating results for the Mastcam-Z focal length.

It seems, for the one case I looked at, that the values in the JSON are in the order VORCAH instead of CAHVOR as one would expect. I don't know if this is intentional or a bug, and I'm not sure these models are actually correct anyway. But you could take a look.

-------------------- Disclaimer: This post is based on public information only. Any opinions are my own.

Feb 26 2021, 05:30 PM

#25

Founder Group: Chairman Posts: 14432 Joined: 8-February 04 Member No.: 1

Jim has put out a Mastcam-Z filename decode guide:

https://mastcamz.asu.edu/decoding-the-raw-p...mage-filenames/

It includes a three-digit field giving the focal length in mm. All the stereo pan images report 34 mm there, which matches.

Feb 26 2021, 05:35 PM

#26

Senior Member Group: Members Posts: 2517 Joined: 13-September 05 Member No.: 497

QUOTE It includes digits in there that describe the focal length in mm as three digits.

Oh yeah, duh. Well, it was much more interesting to extract it from the camera model. FYI, the widest angle of MCZ is 26 mm.

-------------------- Disclaimer: This post is based on public information only. Any opinions are my own.

Feb 26 2021, 05:45 PM

#27

Founder Group: Chairman Posts: 14432 Joined: 8-February 04 Member No.: 1

Ahh, OK, so they misspoke at yesterday's event then. This matches MSL Mastcam Left, then.

Feb 26 2021, 06:15 PM

#28

Senior Member Group: Members Posts: 2517 Joined: 13-September 05 Member No.: 497

QUOTE This matches MSL Mastcam Left then

Correct. The original MCZ spec was for 34 mm to 100 mm, but we ended up with some extra-credit range and can do 26 mm to 110 mm. Typically the values 26, 34, 48, 63, 79, 100, and 110 will be used, but AFAIK nobody is sure yet how they'll be chosen. The zoom has to be stowed at 26 mm for driving, so that might motivate use of that setting sometimes.

-------------------- Disclaimer: This post is based on public information only. Any opinions are my own.

Feb 26 2021, 06:51 PM

#29

Junior Member Group: Members Posts: 95 Joined: 11-January 07 From: Amsterdam Member No.: 1584

QUOTE Seems like, for the one case I looked at, that the values in the JSON are in the order VORCAH instead of CAHVOR as one would expect.

So close. Processing the CAHVOR as VORCAH gave more plausible results, although off by one decimal place. It was a fun exercise but no longer necessary... thanks for posting the decode guide, Doug!

Feb 26 2021, 07:00 PM

#30

Senior Member Group: Members Posts: 2517 Joined: 13-September 05 Member No.: 497

QUOTE So close. Processing the CAHVOR as VORCAH gave more plausible results, although one decimal off.

Pixel size is 7.4e-6 m = 7.4e-3 mm; is that what you were using?

-------------------- Disclaimer: This post is based on public information only. Any opinions are my own.

Feb 26 2021, 07:10 PM

#31

Junior Member Group: Members Posts: 95 Joined: 11-January 07 From: Amsterdam Member No.: 1584

QUOTE Pixel size is 7.4e-6 m = 7.4e-3 mm, is that what you were using?

Ah, thank you, I used 0.074 mm instead of 0.0074 mm. Things are obviously OK now.
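In the CAHV family of models (the framework behind the links above), the horizontal focal length in pixels is hs = |A x H| and the optical-axis column is hc = A . H, with vs and vc defined the same way from V. Multiplying hs by the pixel pitch gives the focal length in mm, which is exactly where a 0.074 vs 0.0074 mm mix-up becomes a factor of ten. A minimal sketch with hypothetical helper names, using the A, H, V vectors of the Sol 9 FRONT_HAZCAM_LEFT_A model quoted later in this thread. Assumption: since hc comes out near 2570, the model appears to be in full-resolution pixels, four times the 1280x960 browse images, so the pitch is taken as 0.0255 mm / 4.

```python
import math

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(p * q for p, q in zip(a, b))

def norm(a):
    return math.sqrt(dot(a, a))

def cahv_intrinsics(a_vec, h_vec, v_vec):
    """Return (hs, hc, vs, vc) in pixels from the A, H and V model vectors.

    hs, vs: horizontal/vertical focal lengths in pixels (|A x H|, |A x V|)
    hc, vc: image coordinates of the optical axis (A . H, A . V)
    """
    return (norm(cross(a_vec, h_vec)), dot(a_vec, h_vec),
            norm(cross(a_vec, v_vec)), dot(a_vec, v_vec))

# A, H, V from the Sol 9 FRONT_HAZCAM_LEFT_A model quoted in this thread.
A = (0.884935, -0.17181, 0.432865)
H = (2606.49, 1675.44, 1273.54)
V = (747.819, -326.9, 2757.19)
hs, hc, vs, vc = cahv_intrinsics(A, H, V)

# Assumption: full-resolution pixels, i.e. the 1280x960 pitch divided by 4.
PIXEL_MM = 0.0255 / 4
focal_mm = hs * PIXEL_MM
print(f"hs={hs:.1f} px, hc={hc:.1f} px, vs={vs:.1f} px, vc={vc:.1f} px")
print(f"focal length ~ {focal_mm:.2f} mm")
```

With these numbers hs comes out around 2149 px and the focal length around 13.7 mm, close to the 14 mm Hazcam figure quoted earlier. For Mastcam-Z, the same multiplication with a pitch of 0.074 mm instead of 0.0074 mm gives a focal length ten times too long.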

Feb 26 2021, 07:21 PM

#32

Senior Member Group: Members Posts: 4247 Joined: 17-January 05 Member No.: 152

In a previous post I estimated the horizontal FOV to be 19.5 degrees for an image with a "34" focal length filename field. I said there that it was near the maximum FOV of 19.2 deg, but I mistakenly used the vertical FOV, not the horizontal one. My measured 19.5 deg corresponds very closely to the maximum horizontal FOV of 25.6 deg scaled from 26 to 34 mm, so everything is consistent with a 34 mm focal length (apart from yesterday's statement, as Doug mentioned).

And we seem to get a little bonus: around 1608 horizontal pixels, vs the 1600 photoactive pixels stated in Bell et al.
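The FOV/focal-length relation used above can be checked with an ideal pinhole model, FOV = 2 atan(N p / 2f), where N is the number of columns, p the pixel pitch and f the focal length. A minimal sketch that ignores lens distortion, taking the 1600 photoactive columns and the 7.4 um pitch quoted in this thread; the function name is hypothetical:

```python
import math

def horizontal_fov_deg(n_pixels, pixel_mm, focal_mm):
    """Full horizontal FOV of an ideal (distortion-free) pinhole camera, in degrees."""
    half_width_mm = n_pixels * pixel_mm / 2.0
    return math.degrees(2.0 * math.atan(half_width_mm / focal_mm))

# 1600 photoactive columns and a 7.4 um pitch, as quoted in this thread.
for focal in (26, 34, 48, 63, 79, 100, 110):
    print(f"{focal:4d} mm -> {horizontal_fov_deg(1600, 0.0074, focal):5.2f} deg")
```

This gives about 25.65 deg at 26 mm and about 19.75 deg at 34 mm, while scaling 25.6 deg linearly by 26/34 gives about 19.6 deg; both are close to the measured 19.5 deg.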

Feb 27 2021, 11:19 PM

#33

Forum Contributor Group: Members Posts: 1372 Joined: 8-February 04 From: North East Florida, USA. Member No.: 11

Is there a way to tell which zoom was used from the Mastcam-Z file names?

Feb 27 2021, 11:31 PM

#34

Senior Member Group: Members Posts: 2517 Joined: 13-September 05 Member No.: 497

QUOTE Is there a way to tell the zoom used from the MastCamZ file names ?

Yes. See post #25 in this thread.

-------------------- Disclaimer: This post is based on public information only. Any opinions are my own.

Mar 1 2021, 04:36 AM

#35

Member Group: Members Posts: 219 Joined: 14-November 11 From: Washington, DC Member No.: 6237

It appears the JSON data for the Sol 9 images has corrected the CAHVORE formatting issue we saw in the earlier image metadata (i.e. the components are now in the correct order).

I wonder if it was an effect of the different software version loaded during cruise.

CODE
"camera": {
  "filter_name": "UNK",
  "camera_vector": "(0.6929857012250769,-0.7042489328574726,-0.1542862873579498)",
  "camera_model_component_list": "(1.10353,-0.008945,-0.729124);(0.884935,-0.17181,0.432865);(2606.49,1675.44,1273.54);(747.819,-326.9,2757.19);(0.885293,-0.171227,0.432363);(1e-06,0.00889,-0.006754);(-0.006127,0.010389,0.004541);2.0;0.0",
  "camera_position": "(1.10353,-0.008945,-0.729124)",
  "instrument": "FRONT_HAZCAM_LEFT_A",
  "camera_model_type": "CAHVORE"
}
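Because both layouts show up in the feed, a parser that accepts either one avoids hand-editing. A minimal sketch, assuming (from the two samples in this thread only) that the Sol 9 layout is C;A;H;V;O;R;E followed by two scalars, and that the early Sol 2 layout was the two scalars followed by V;O;R;E;C;A;H, which matches the "VORCAH" observation. As a sanity check, the recovered C equals the record's camera_position in both samples. The function and constant names are hypothetical.

```python
def parse_components(text):
    """Split a camera_model_component_list string into tuples and floats."""
    parts = []
    for item in text.split(";"):
        item = item.strip()
        if item.startswith("(") and item.endswith(")"):
            parts.append(tuple(float(x) for x in item[1:-1].split(",")))
        else:
            parts.append(float(item))
    return parts

NAMES = ("C", "A", "H", "V", "O", "R", "E")

def parse_cahvore(text):
    """Return a dict with the C, A, H, V, O, R, E vectors and the two scalars.

    Assumed orderings, inferred from the two samples in this thread:
      Sol 9 and later:  C;A;H;V;O;R;E;s1;s2
      early (Sol 2):    s1;s2;V;O;R;E;C;A;H
    """
    parts = parse_components(text)
    if isinstance(parts[0], float):  # early layout: scalars come first
        scalars, vectors = parts[:2], parts[2:]
        vectors = vectors[4:] + vectors[:4]  # V,O,R,E,C,A,H -> C,A,H,V,O,R,E
    else:
        vectors, scalars = parts[:7], parts[7:]
    model = dict(zip(NAMES, vectors))
    model["scalars"] = tuple(scalars)
    return model

SOL2 = ("2.0;0.0;(46.176,2.97867,720.521);(-0.701049,0.00940617,0.713051);"
        "(8.39e-06,0.0168764,-0.00743155);(-0.00878744,-0.00869157,-0.00676256);"
        "(-1.05782,-0.466472,-0.724517);(-0.702572,0.0113481,0.711523);"
        "(-448.981,-528.002,453.359)")
SOL9 = ("(1.10353,-0.008945,-0.729124);(0.884935,-0.17181,0.432865);"
        "(2606.49,1675.44,1273.54);(747.819,-326.9,2757.19);"
        "(0.885293,-0.171227,0.432363);(1e-06,0.00889,-0.006754);"
        "(-0.006127,0.010389,0.004541);2.0;0.0")

for label, text in (("Sol 2", SOL2), ("Sol 9", SOL9)):
    model = parse_cahvore(text)
    print(label, "C =", model["C"], "A =", model["A"])
```

Detecting the layout from the first token is a heuristic; if the feed format changes again, checking C against camera_position is a cheap way to catch it.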

Mar 1 2021, 04:43 AM

#36

Founder Group: Chairman Posts: 14432 Joined: 8-February 04 Member No.: 1

The front hazcams have quite a significant toe-out between them: about 20 deg.

See page 17 of https://www.ncbi.nlm.nih.gov/pmc/articles/P...Article_765.pdf

Mar 1 2021, 08:14 PM

#37

Member Group: Members Posts: 240 Joined: 18-July 06 Member No.: 981

Mar 1 2021, 11:07 PM

#38

Member Group: Members Posts: 808 Joined: 10-October 06 From: Maynard Mass USA Member No.: 1241

The cameras used for the rover lookdown and lookup views are AMS CMV20000. Here is the datasheet from AMS: https://ams.com/documents/20143/36005/CMV20...e1-428cb363ab0a

Maybe the lookup camera can be used for stargazing, cloud studies, tau...

My debayer program still gives a slightly wonky green (are there known R and B multiplier 'factors' for this camera?). I downsized the result to fit in 3 MB here.

-------------------- CLA CLL
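For comparing debayer results, the simplest possible demosaic is the 2x2 "superpixel" method: each Bayer cell becomes one RGB pixel at half resolution, with the two green sites averaged. This is a swapped-in technique for illustration, not the interpolating debayer in G'MIC and not necessarily what any program mentioned here does. The RGGB default is an assumption; the actual colour-filter phase of these frames is not established in this thread, and a wrong phase swaps or mixes channels. A minimal numpy sketch with a hypothetical function name:

```python
import numpy as np

def superpixel_debayer(raw, pattern="RGGB"):
    """Half-resolution demosaic: each 2x2 Bayer cell becomes one RGB pixel.

    `pattern` lists the colours of the top-left, top-right, bottom-left and
    bottom-right sites of a cell (RGGB is an assumption); greens are averaged.
    """
    raw = np.asarray(raw, dtype=np.float64)
    h, w = raw.shape
    raw = raw[: h - h % 2, : w - w % 2]  # drop an odd last row/column
    sites = [raw[0::2, 0::2], raw[0::2, 1::2], raw[1::2, 0::2], raw[1::2, 1::2]]
    planes = {"R": [], "G": [], "B": []}
    for colour, site in zip(pattern, sites):
        planes[colour].append(site)
    return np.dstack([np.mean(planes[c], axis=0) for c in "RGB"])

# Hypothetical 4x4 mosaic, just to show the shapes involved.
mosaic = np.arange(16, dtype=np.uint16).reshape(4, 4)
rgb = superpixel_debayer(mosaic)
print(rgb.shape)  # (2, 2, 3)
```

Since no interpolation is involved, any overall colour cast in the output comes from the channel sensitivities rather than from the demosaic step.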

Mar 2 2021, 03:19 AM

#39

Member Group: Members Posts: 248 Joined: 25-February 21 From: Waltham, Massachusetts, U.S.A. Member No.: 8974

Since I believe this may not have been mentioned: it is useful to know that the RSS API JSON feed can return just a single record, selected with its id query parameter.

So one can browse to find an image, say

CODE https://mars.nasa.gov/mars2020-raw-images/pub/ods/surface/sol/00002/ids/edr/browse/edl/EDF_0002_0667111022_758ECV_N0010052EDLC00002_0010LUJ01.png

and then use the id (the filename without suffix) to get the record:

CODE https://mars.nasa.gov/rss/api/?feed=raw_images&category=mars2020&feedtype=json&id=EDF_0002_0667111022_758ECV_N0010052EDLC00002_0010LUJ01

For example, this shows that the downlook rover camera does not have any interesting additional data.

[edit:] If the image filename has trailing digits, as in https://mars.nasa.gov/mars2020-raw-images/p...2_06_0LLJ01.png, then for some cameras (not sure which ones) it is necessary to omit the trailing digits (here "01") from the JSON id: https://mars.nasa.gov/rss/api/?feed=raw_ima...AZ00102_07_0LLJ

EDF_0002_0667111022_758ECV_N0010052EDLC00002_0010LUJ01 (for EDL) vs. FLE_0009_0667754529_115ECM_N0030000FHAZ00102_07_0LLJ (for FHAZ)

--
Andreas Plesch, andreasplesch at gmail dot com
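The filename-to-record step above is easy to script. A minimal sketch using the query parameters exactly as they appear in the example URL; the function names are hypothetical, and since it is not known which cameras need the trailing digits dropped, that is a flag to set by hand rather than something the code decides:

```python
import re
from pathlib import PurePosixPath
from urllib.parse import urlencode, urlparse

API_URL = "https://mars.nasa.gov/rss/api/"

def image_id(filename_or_url, strip_trailing_digits=False):
    """Image id = file name without its suffix, optionally minus trailing digits."""
    stem = PurePosixPath(urlparse(filename_or_url).path).stem
    if strip_trailing_digits:
        stem = re.sub(r"\d+$", "", stem)
    return stem

def record_url(filename_or_url, strip_trailing_digits=False):
    """Build the single-record JSON feed URL using the id query parameter."""
    query = urlencode({
        "feed": "raw_images",
        "category": "mars2020",
        "feedtype": "json",
        "id": image_id(filename_or_url, strip_trailing_digits),
    })
    return f"{API_URL}?{query}"

png = ("https://mars.nasa.gov/mars2020-raw-images/pub/ods/surface/sol/00002/"
       "ids/edr/browse/edl/EDF_0002_0667111022_758ECV_N0010052EDLC00002_0010LUJ01.png")
print(record_url(png))  # same record URL as quoted above
```

`re.sub(r"\d+$", "", ...)` removes every trailing digit, which for ids ending in a letter plus "01" means just the "01".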

Mar 2 2021, 03:23 AM

#40

Member Group: Members Posts: 219 Joined: 14-November 11 From: Washington, DC Member No.: 6237

Good info from Emily Lakdawalla on Twitter:

QUOTE @elakdawalla 8:54 PM · Mar 1, 2021

- I just got off WebEx with Justin Maki, who leads the Perseverance engineering camera team. I've learned a lot and gotten a lot of confused questions sorted out. I'll try to bang out a blog entry with lots of techy detail about raw images tomorrow.
- The TL;DR of the interview was: a lot of the things that are weird and confusing in the raw image metadata from sols 1-4 have to do with the rover being on the cruise flight software at the time.
- For example, the cruise flight software did not "know" how to automatically create image thumbnails. So they had to instruct the rover computer with separate commands to make thumbnails for each image, which is why sequence IDs don't match up between thumbnail and full-res.
- Many of the more confusing issues were solved by the flight software update. They're going to continue to tweak parameters over the next week or two, testing to see what modes they like best for returning their data, but before long they'll settle into some routines.
- It's SO FUN to see this process working out in real time. They *could* hold all the images back until they're happy with their tweaking, but they're not. They're just shunting the images out, never mind the temporarily wonky metadata.

Mar 2 2021, 03:26 AM

#41

Member Group: Members Posts: 890 Joined: 18-November 08 Member No.: 4489

QUOTE My debayer program is still a little wonky green

Have you tried the debayer in G'MIC? https://gmic.eu/

CODE gmic input.png bayer2rgb -o output.png

Mar 2 2021, 06:02 PM

#42

Senior Member Group: Members Posts: 4247 Joined: 17-January 05 Member No.: 152

Yeah, gmic gives the same wonky green/yellow cast. The particular deBayering interpolation algorithm shouldn't determine the overall hue (though it may affect pixel-scale chroma details). What deBayering by itself gives is known as "raw colour", and it won't generally look right because the relative sensitivities of the RGB channels differ from those of the eye. The deBayered images released have similar casts, e.g.:

https://mars.nasa.gov/mars2020-raw-images/p...0_01_295J02.png

A simple relative scaling between the RGB channels, i.e. a white balance, should help a lot with these images.
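One simple way to choose such a per-channel scaling is the gray-world assumption: scale each channel so the image averages to neutral grey. This is a swapped-in heuristic, not a calibrated Mars white balance; gains taken from a calibration target or picked by eye would be applied the same way. A minimal numpy sketch with a hypothetical function name (a channel with zero mean would cause a division by zero, which is not handled here):

```python
import numpy as np

def gray_world_balance(rgb):
    """Scale each channel so its mean matches the green mean.

    Expects a float image in [0, 1] with shape (height, width, 3). Returns the
    balanced image (clipped to [0, 1]) and the per-channel gains used.
    """
    rgb = np.asarray(rgb, dtype=np.float64)
    means = rgb.reshape(-1, 3).mean(axis=0)
    gains = means[1] / means  # green gain is 1 by construction
    return np.clip(rgb * gains, 0.0, 1.0), gains

# Hypothetical image with a strong green cast.
tinted = np.ones((2, 2, 3)) * np.array([0.5, 1.0, 0.25])
balanced, gains = gray_world_balance(tinted)
print(gains)
```

On a real frame, gray-world tends to over-neutralize a genuinely reddish scene like Mars, so the gains it finds are a starting point rather than an answer.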

Mar 2 2021, 07:48 PM

#43

Senior Member Group: Members Posts: 4247 Joined: 17-January 05 Member No.: 152

QUOTE A simple relative scaling between RGB channels, ie a whitebalance, should help a lot with these images.

If the black level isn't maintained during the autostretch applied to the public engineering camera frames, then such a simple fixed RGB scaling won't work for all frames, and we're left with trial and error. So different tiles of a full-frame navcam image might need different colour adjustments.
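The black-level point can be made concrete with a simple model. Assume, as an illustration rather than a documented description of the pipeline, that the autostretch maps a raw value to (v - l)/(u - l) with the same per-frame limits l and u for all three channels, and that each raw value is the true signal x_c plus a common black level b:

```latex
s_c = \frac{x_c + b - l}{u - l}, \qquad c \in \{R, G, B\}

\text{If } l = b:\quad \frac{s_R}{s_G} = \frac{x_R}{x_G}
\quad\Rightarrow\quad \text{one fixed gain } k_c \text{ per channel works for every frame.}

\text{If } l \neq b:\quad \frac{s_R}{s_G} = \frac{x_R + (b - l)}{x_G + (b - l)}
\quad\Rightarrow\quad \text{the ratio depends on the frame's } l, \text{ so no fixed } k_c \text{ fits all frames.}
```

Since l is chosen per frame (or per tile), the offset b - l changes from tile to tile, which is consistent with different tiles needing different colour adjustments.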

Mar 3 2021, 07:58 AM

#44

Newbie  Group: Members Posts: 3 Joined: 28-February 21 Member No.: 8978

So, as I understand it: Perseverance has the ability to compress frames into .MP4 files? And the landing videos posted by NASA on YouTube were uploads of those MP4 files? And the rover will later send all of the full-resolution frames (thousands of them) of the landing?

My question is: are those MP4 files available anywhere to download? Has NASA made them available? YouTube compresses videos a lot, and the original files would have a lot more detail. Thanks!

Mar 3 2021, 05:54 PM

#45

Member    Group: Members Posts: 240 Joined: 18-July 06 Member No.: 981

QUOTE My question is: are those MP4 files available anywhere to download? Has NASA made them available?

Great question. JPL was able to get video footage very quickly, and there are still a lot of individual frames to be published, so these must have been videos created by the cameras and downlinked on Sol 1. Where do you suppose the raw video files are? Can we get our hands on them?

Mar 4 2021, 10:57 AM

#46

Newbie  Group: Members Posts: 3 Joined: 28-February 21 Member No.: 8978

QUOTE Great question. JPL was able to get video footage very quickly, and there are still a lot of individual frames to be published, so these must have been videos created by the cameras and downlinked on Sol 1. Where do you suppose the raw video files are? Can we get our hands on them?

I found this file: https://mars.nasa.gov/system/downloadable_i..._deployment.mp4

It looks like it's the original MP4 file. I couldn't find any others, though.

Mar 4 2021, 03:37 PM

#47

Senior Member     Group: Members Posts: 4247 Joined: 17-January 05 Member No.: 152

That video is slowed down and has duplicated frames, so must've been re-encoded from the original.
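One way to check for duplicated frames yourself is to compare consecutive frames. A minimal sketch, assuming the frames have already been decoded into equally sized NumPy arrays (decoding isn't shown) and using a hypothetical mean-absolute-difference threshold `tol` so that re-encoding noise doesn't hide a repeat:

```python
import numpy as np

def duplicate_frame_indices(frames, tol=0.5):
    """Indices i where frame i is (nearly) identical to frame i - 1.

    `tol` is the mean absolute pixel difference below which two frames
    count as duplicates; exact equality is too strict after lossy re-encoding.
    """
    dups = []
    for i in range(1, len(frames)):
        a = np.asarray(frames[i - 1], dtype=float)
        b = np.asarray(frames[i], dtype=float)
        if np.mean(np.abs(a - b)) <= tol:
            dups.append(i)
    return dups
```

A regular pattern of duplicates (say, every other frame) would be consistent with a frame-rate conversion during re-encoding.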

Mar 8 2021, 04:05 PM

#48

Newbie  Group: Members Posts: 2 Joined: 7-March 21 Member No.: 8986

Hello guys, I am new here and have no experience with pictures from Mars, so this may be obvious to you, but I'm struggling to work out how it works. I would like to know if there is any way to find out, from a picture, which direction the rover is looking. As an example, I would love to know if the hill

Mar 8 2021, 05:19 PM

#49

Member    Group: Members Posts: 700 Joined: 3-December 04 From: Boulder, Colorado, USA Member No.: 117

One easy way to get oriented is to look for Phil Stooke's circular projections which he posts regularly to support his mapping efforts. These always have north at the top, and show vertically-exaggerated images of features in the distance. The one linked here confirms your hunch about the identity of that mesa.

Mar 8 2021, 08:56 PM

#50

The Insider    Group: Members Posts: 669 Joined: 3-May 04 Member No.: 73

Mar 9 2021, 01:48 AM

#51

Member    Group: Members Posts: 910 Joined: 4-September 06 From: Boston Member No.: 1102

The raw page has several types of images and cameras, but I did not see SuperCam (Perseverance's ChemCam) listed. Will these images eventually find their way to the raw page?

Mar 9 2021, 04:15 AM

#52

Forum Contributor     Group: Members Posts: 1372 Joined: 8-February 04 From: North East Florida, USA. Member No.: 11

Mar 9 2021, 07:09 AM

#53

Newbie  Group: Members Posts: 2 Joined: 7-March 21 Member No.: 8986

@john_s @Pando thanks a lot guys

Mar 9 2021, 09:57 AM

#54

Member    Group: Members Posts: 240 Joined: 18-July 06 Member No.: 981

QUOTE I found this file: https://mars.nasa.gov/system/downloadable_i..._deployment.mp4 It looks like it's the original MP4 file. I couldn't find any others though.

Thanks for finding it. I hope they release all the raw videos that were downlinked from the rover on Sols 0-1. Please, JPL! This is historic stuff: the first use of a legendary open-source utility on another planet. FFmpeg rocks the (solar) system.

Mar 9 2021, 02:48 PM

#55

Senior Member     Group: Members Posts: 2086 Joined: 13-February 10 From: Ontario Member No.: 5221

A silly clickbait video where someone zooms in on empty sky (https://www.youtube.com/watch?v=dQHYA0-Tfrk) got me thinking: how good would Mastcam-Z be at astronomy? It would presumably be able to catch both moons if pointed at the right place and time (either during the day or at night, if power allows)?

I remember the Phobos-rise image taken early in Curiosity's mission (http://www.midnightplanets.com/web/MSL/ima...58E01_DXXX.html); Mastcam-Z could do a much better job now.

Mar 9 2021, 03:48 PM

#56

Senior Member     Group: Members Posts: 4247 Joined: 17-January 05 Member No.: 152

The MCZ specs are pretty similar to MSL Mastcam, apart from the ability to zoom. The long end is 110 mm at f/9.5, vs 100 mm at f/10 for MR (the MSL Mastcam right eye), and the sensor resolutions are very similar. So moon imaging should be very similar.

I thought the main advantages of MCZ were the ability to do stereo imaging with the same FOV in the L and R cameras, as well as the intermediate zoom focal lengths.
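To put rough numbers on "very similar": the entrance pupil is D = f/N and the Rayleigh diffraction limit is 1.22λ/D. The sketch below uses only the focal lengths and f-numbers from the post, plus an assumed wavelength of 550 nm. It ignores pixel scale, which may well be the practical limit, so treat it as an optics-only comparison:

```python
import math

ARCSEC_PER_RAD = 180.0 / math.pi * 3600.0

def aperture_mm(focal_mm, f_number):
    """Entrance-pupil diameter D = f / N."""
    return focal_mm / f_number

def rayleigh_arcsec(d_mm, wavelength_nm=550.0):
    """Rayleigh diffraction limit 1.22 * lambda / D, converted to arcseconds."""
    return 1.22 * (wavelength_nm * 1e-9) / (d_mm * 1e-3) * ARCSEC_PER_RAD

mcz_d = aperture_mm(110.0, 9.5)   # Mastcam-Z long end, figures from the post
mr_d = aperture_mm(100.0, 10.0)   # MSL Mastcam right, figures from the post
for name, d in (("MCZ", mcz_d), ("MR", mr_d)):
    print(f"{name}: D = {d:.1f} mm, diffraction limit ~ {rayleigh_arcsec(d):.1f} arcsec")
```

That works out to roughly 11.6 mm vs 10 mm of aperture, or about 12 vs 14 arcseconds, a difference of around 16%.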

Mar 9 2021, 04:48 PM

#57

Senior Member     Group: Members Posts: 2517 Joined: 13-September 05 Member No.: 497

QUOTE So moon imaging should be very similar.

Fred is correct; MCZ will not improve significantly on images like https://photojournal.jpl.nasa.gov/catalog/PIA17350

--------------------
Disclaimer: This post is based on public information only. Any opinions are my own.

Mar 9 2021, 06:52 PM

#58

Member    Group: Members Posts: 149 Joined: 18-June 08 Member No.: 4216

For astronomical imaging, there is also SkyCam.

Mar 9 2021, 08:35 PM

#59

Founder     Group: Chairman Posts: 14432 Joined: 8-February 04 Member No.: 1

The MEDA SkyCam is a repurposed MER/MSL-heritage Hazcam. They are INCREDIBLY optically dark. We've tried (and failed) to image night-time features with the MSL Navcams; even Earth and Venus were not visible. You're not going to get much, if anything, astronomical through the MEDA SkyCam.

Mar 9 2021, 09:18 PM

#60

Member    Group: Members Posts: 149 Joined: 18-June 08 Member No.: 4216

Pity. It would have been a great edu resource.

Mar 9 2021, 10:01 PM

#61

Senior Member     Group: Members Posts: 2429 Joined: 30-January 13 From: Penang, Malaysia. Member No.: 6853

Sol 18: Our first image from the SHERLOC/WATSON camera with its lens cap open

I did the Bayer reconstruction of the raw image using GIMP with the 'G'MIC-Qt' add-on filter. A similar image data dropout was seen on some of the earlier images taken with the lens cap closed, but in a different place, so hopefully it's not a problem. I noticed that no focus motor count was given on the raw page, so it's not possible to establish the distance to the target or estimate the scale as we could for MAHLI. Maybe that data will be in the JSON details?

Raw image

Mar 9 2021, 10:15 PM

#62

Senior Member     Group: Members Posts: 4247 Joined: 17-January 05 Member No.: 152

I see no motor count on the JSON details page for that image:

https://mars.nasa.gov/rss/api/?feed=raw_ima...LC08001_0000LUJ

The filter name field just says "open".

Mar 9 2021, 11:47 PM

#63

Member    Group: Members Posts: 248 Joined: 25-February 21 From: Waltham, Massachusetts, U.S.A. Member No.: 8974

Here is the full json in case something jumps out:

```json
{
  "image": [
    {
      "extended": {
        "mastAz": "307.733",
        "mastEl": "-20.2427",
        "sclk": "668555815.086",
        "scaleFactor": "1",
        "xyz": "(39.3213,34.1703,-0.00444177)",
        "subframeRect": "(1,1,1648,1200)",
        "dimension": "(1648,1200)"
      },
      "link_related_sol": "https://mars.nasa.gov/mars2020/multimedia/raw-images/?sol=18",
      "sol": 18,
      "attitude": "(0.812911,-0.0114458,0.0116122,0.582159)",
      "json_link_related_sol": "https://mars.nasa.gov/rss/api/?feed=raw_images&category=mars2020&feedtype=json&sol=18",
      "image_files": {
        "medium": "https://mars.nasa.gov/mars2020-raw-images/pub/ods/surface/sol/00018/ids/edr/browse/shrlc/SI0_0018_0668555815_008ECM_N0030578SRLC08001_0000LUJ02_800.jpg",
        "small": "https://mars.nasa.gov/mars2020-raw-images/pub/ods/surface/sol/00018/ids/edr/browse/shrlc/SI0_0018_0668555815_008ECM_N0030578SRLC08001_0000LUJ02_320.jpg",
        "full_res": "https://mars.nasa.gov/mars2020-raw-images/pub/ods/surface/sol/00018/ids/edr/browse/shrlc/SI0_0018_0668555815_008ECM_N0030578SRLC08001_0000LUJ02.png",
        "large": "https://mars.nasa.gov/mars2020-raw-images/pub/ods/surface/sol/00018/ids/edr/browse/shrlc/SI0_0018_0668555815_008ECM_N0030578SRLC08001_0000LUJ02_1200.jpg"
      },
      "imageid": "SI0_0018_0668555815_008ECM_N0030578SRLC08001_0000LUJ",
      "camera": {
        "filter_name": "OPEN",
        "camera_vector": "(0.5740789607042541,-0.7421743420005331,0.3458476441336543)",
        "camera_model_component_list": "(2.08195,-0.111422,-1.69411);(0.577631,-0.741337,0.341707);(-1801.91,-2368.23,291.421);(946.46,-1240.2,-2497.62);(0.580824,-0.747997,0.321164);(0.000473,-0.032613,0.136778)",
        "camera_position": "(2.08195,-0.111422,-1.69411)",
        "instrument": "SHERLOC_WATSON",
        "camera_model_type": "CAHVOR"
      },
      "caption": "NASA's Mars Perseverance rover acquired this image using its SHERLOC WATSON camera, located on the turret at the end of the rover's robotic arm. \n\nThis image was acquired on Mar. 9, 2021 (Sol 18) at the local mean solar time of 17:13:24.",
      "sample_type": "Full",
      "date_taken_mars": "Sol-00018M17:13:24.434",
      "credit": "NASA/JPL-Caltech",
      "date_taken_utc": "2021-03-09T17:56:16.000",
      "link": "https://mars.nasa.gov/mars2020/multimedia/raw-images/?id=SI0_0018_0668555815_008ECM_N0030578SRLC08001_0000LUJ",
      "link_related_camera": "https://mars.nasa.gov/mars2020/multimedia/raw-images/?camera=SHERLOC_WATSON&sol=18",
      "drive": "578",
      "title": "Mars Perseverance Sol 18: WATSON Camera ",
      "site": 3,
      "date_received": "2021-03-09T18:26:55Z"
    }
  ],
  "type": "mars2020-imagedetail-1.1",
  "mission": "mars2020"
}
```

--------------------
Andreas Plesch, andreasplesch at gmail dot com

Mar 10 2021, 03:12 AM

#64

Member    Group: Members Posts: 219 Joined: 14-November 11 From: Washington, DC Member No.: 6237

If "camera_position" is correct at (2.08195,-0.111422,-1.69411) then WATSON's eyeball would be a fairly high 1.7m off the ground, and out ~1m in front of the body, just left (port) of centerline.

(For reference, in the RNAV frame z = 0 is ~ground level, positive down; the Hazcams are at x ~ +1.1, z ~ -0.7 and the Navcam at z ~ -1.9.) An FOV of 37 deg diagonal would give about 1.1 m diagonal in the image if pointed straight down. However, if the axis in CAHVOR is correct, then it's pointed ~20 deg below horizontal (which works out to match the "mast" azimuth & elevation values). I'm not convinced either of these is right, or my math, for that matter.
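The arithmetic in this post can be sketched as follows. The sketch assumes, as the post does, that `camera_position` is in the RNAV frame (x forward, y right, z positive down, z = 0 near ground level) and that the second CAHVOR component is the pointing axis A. It also assumes flat ground, no lens distortion, and the post's 37° diagonal FOV. The helper names are hypothetical:

```python
import math

def parse_triplet(s):
    """Parse strings like "(2.08195,-0.111422,-1.69411)" from the raw-image JSON."""
    return tuple(float(v) for v in s.strip("()").split(","))

def height_above_ground(pos):
    """RNAV z is positive down with z = 0 near ground, so height is -z."""
    return -pos[2]

def footprint_diagonal(height_m, fov_diag_deg):
    """Diagonal ground coverage if the camera pointed straight down."""
    return 2.0 * height_m * math.tan(math.radians(fov_diag_deg) / 2.0)

def az_el_deg(v):
    """Azimuth (from +x toward +y) and elevation of a vector in a z-down frame."""
    x, y, z = v
    az = math.degrees(math.atan2(y, x)) % 360.0
    el = -math.degrees(math.asin(z / math.sqrt(x * x + y * y + z * z)))
    return az, el

pos = parse_triplet("(2.08195,-0.111422,-1.69411)")    # camera_position
axis = parse_triplet("(0.577631,-0.741337,0.341707)")  # CAHVOR A component
h = height_above_ground(pos)
diag = footprint_diagonal(h, 37.0)
az, el = az_el_deg(axis)
```

Under these assumptions the camera sits about 1.69 m up, a straight-down view would span about 1.13 m diagonally, and the axis points at roughly 307.9° azimuth and -20.0° elevation. Those pointing values are close to the JSON's mastAz/mastEl (307.733 / -20.2427).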

Mar 10 2021, 10:41 AM

#65

Newbie  Group: Members Posts: 3 Joined: 28-February 21 Member No.: 8978

What is the current best method for downloading all of the raw png files from all cameras?

Mar 10 2021, 03:17 PM

#66

Senior Member     Group: Members Posts: 2517 Joined: 13-September 05 Member No.: 497

QUOTE If "camera_position" is correct at (2.08195,-0.111422,-1.69411) then WATSON's eyeball would be a fairly high 1.7m off the ground...

That looks wrong to me; I suspect a bug somewhere.

--------------------
Disclaimer: This post is based on public information only. Any opinions are my own.

Mar 10 2021, 05:23 PM

#67

Member    Group: Members Posts: 282 Joined: 18-June 04 Member No.: 84

Ah, I didn't realise we wouldn't be seeing SuperCam raw images like we did with Curiosity.

Mar 10 2021, 09:50 PM

#68

Member    Group: Members Posts: 240 Joined: 18-July 06 Member No.: 981

QUOTE What is the current best method for downloading all of the raw png files from all cameras?

https://rkinnett.github.io/roverpics/ plus Firefox/DownThemAll is a great combo, and you can get the actual JSON files or just the URLs. Great service!

Mar 10 2021, 11:50 PM

#69

Senior Member     Group: Members Posts: 4247 Joined: 17-January 05 Member No.: 152

QUOTE if the axis in CAHVOR is correct then it's pointed ~20 deg below horizontal (it works out to match the "mast" azimuth & elevation values)

Using the camera_vector and attitude quaternion gives el, az = -19.3, 19.5 deg in the LL frame (so az is relative to north), so the azimuth is quite different from the mastAz value. My guess is that mastEl and mastAz are for the RNAV frame (the elevations are pretty close since the rover was close to level).
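A sketch of that conversion follows. The convention is an assumption, not documented in the feed: `attitude` is taken as a scalar-first (w, x, y, z) unit quaternion that rotates RNAV vectors into a local-level frame with x north, y east and z down. With that convention the sketch gives approximately the el ≈ -19.3°, az ≈ 19.5° quoted above. The same az/el helper applied to the raw RNAV `camera_vector` lands close to mastAz/mastEl, which supports the guess that those fields are RNAV values:

```python
import math

def quat_rotate(q, v):
    """Rotate vector v by the quaternion q = (w, x, y, z), scalar first."""
    w, x, y, z = q
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n
    r = [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]
    return tuple(sum(r[i][j] * v[j] for j in range(3)) for i in range(3))

def az_el_deg(v):
    """Azimuth from +x toward +y and elevation above horizontal, z positive down."""
    x, y, z = v
    az = math.degrees(math.atan2(y, x)) % 360.0
    el = -math.degrees(math.asin(z / math.sqrt(x * x + y * y + z * z)))
    return az, el

attitude = (0.812911, -0.0114458, 0.0116122, 0.582159)  # "attitude" from the JSON
camera_vector = (0.5740789607042541, -0.7421743420005331, 0.3458476441336543)
az_ll, el_ll = az_el_deg(quat_rotate(attitude, camera_vector))  # local level
az_rnav, el_rnav = az_el_deg(camera_vector)                      # rover frame
```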

Mar 11 2021, 08:02 AM

#70

Junior Member   Group: Members Posts: 20 Joined: 6-August 20 Member No.: 8852

Mar 11 2021, 08:06 AM

#71

Junior Member   Group: Members Posts: 20 Joined: 6-August 20 Member No.: 8852

Hello, there is no response when clicking Export selected image URLs.

Mar 11 2021, 08:07 AM

#72

Junior Member   Group: Members Posts: 20 Joined: 6-August 20 Member No.: 8852

QUOTE https://rkinnett.github.io/roverpics/ Firefox/DownThemAll is a great combo and you can get the actual JSON files or just the URLs. Great service!

Hello, there is no response when clicking "Export selected image URLs".

Mar 11 2021, 01:07 PM

#73

Member    Group: Members Posts: 890 Joined: 18-November 08 Member No.: 4489

QUOTE What software is there? Fast and easy?

I use G'MIC's built-in command "bayer2rgb" (https://gmic.eu/). It is a command-line tool:

```shell
gmic InputImage.png bayer2rgb 1,1,1 -o OutputImage.png
```

Now, they will be a bit "yellow/green" due to the contrast stretch that has been applied to these PNGs.

Mar 11 2021, 05:22 PM

#74

Senior Member     Group: Members Posts: 2530 Joined: 20-April 05 Member No.: 321

The goals of a panoramic color camera and astrophotography are in many ways diametrically opposed. (That said, that image of Phobos and Deimos is simply awesome.) For imaging Phobos, Earth, Jupiter, etc. from Mars and getting the most impressive results, you'd want an instrument very different from Mastcam-Z. And for obvious reasons, that's not what this mission is about.

To do astrophotography from Mars (where, I imagine, the "seeing" would almost always be excellent due to the low pressure), the ideal instrument would be the same as on Earth: a big aperture on the telescope and a small pixel count, just big enough to capture the object of interest. Lots of area means your system is capturing meaningless data when you're looking at a small object surrounded by empty sky. (Some camera software allows you to capture just a small window of a larger frame; I have one with that capability, but I don't think there's any reason why you'd want that on Mars.) You'd want color via a monochrome sensor and the ability to move different filters in front of it, since Bayer color automatically reduces your resolution by a factor of about 1.5.

It'd be fun to see what a ~20 cm aperture telescope could do from Mars, but I think we can imagine it: take pictures of Jupiter as seen by amateurs on Earth and improve the resolution about 2x. It'd be amazing, almost Voyager quality. Pictures of Earth would have about half the resolution of pictures of Mars as seen from Earth (because you'd only be looking at Earth's night side when the planets are closest). But I don't see this sort of add-on justifying the cost anytime soon.
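A back-of-envelope check on the Jupiter idea. It assumes circular, coplanar orbits (semi-major axes 5.203 AU for Jupiter, 1.524 AU for Mars), Jupiter's equatorial diameter, and a 550 nm wavelength. With those assumptions Jupiter at closest approach appears only about 14% larger from Mars (~54") than from Earth (~47"). A 20 cm aperture's diffraction limit (~0.7") would give roughly 77 resolution elements across the disc. If that is right, most of the "about 2x" would presumably come from the steadier seeing the post anticipates rather than from geometry:

```python
import math

AU_KM = 149_597_870.7
JUPITER_EQ_DIAMETER_KM = 142_984.0
ARCSEC_PER_RAD = 180.0 / math.pi * 3600.0

def angular_diameter_arcsec(diameter_km, distance_au):
    """Small-angle apparent diameter of a body at the given distance."""
    return diameter_km / (distance_au * AU_KM) * ARCSEC_PER_RAD

def rayleigh_arcsec(aperture_m, wavelength_nm=550.0):
    """Diffraction limit 1.22 * lambda / D in arcseconds."""
    return 1.22 * wavelength_nm * 1e-9 / aperture_m * ARCSEC_PER_RAD

# Closest approach with circular, coplanar orbits (a simplification).
jup_from_mars = angular_diameter_arcsec(JUPITER_EQ_DIAMETER_KM, 5.203 - 1.524)
jup_from_earth = angular_diameter_arcsec(JUPITER_EQ_DIAMETER_KM, 5.203 - 1.000)
limit_20cm = rayleigh_arcsec(0.20)
elements_across_disc = jup_from_mars / limit_20cm
```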

Mar 11 2021, 06:36 PM

#75

Founder     Group: Chairman Posts: 14432 Joined: 8-February 04 Member No.: 1

QUOTE i use Gmic's built in program " bayer2rgb"

I must admit, I've found G'MIC's debayer performance to be pretty ugly, especially at the edges of images. I've been using PIPP, which can batch-debayer images, and I've been very pleased with the results: https://sites.google.com/site/astropipp/

Mar 11 2021, 07:29 PM

#76

Senior Member     Group: Members Posts: 1619 Joined: 12-February 06 From: Bergerac - FR Member No.: 678

As for me, I'm using ImageJ with the Debayer plugin. Together with a batch script, I can do hundreds of pictures in a very short time.

Mar 11 2021, 08:00 PM

#77

Member    Group: Members Posts: 691 Joined: 21-December 07 From: Clatskanie, Oregon Member No.: 3988

I'm using PixInsight, which is an astrophotography processing suite; it has a really nice debayer script. I use it for my astrophotography, and it is expensive (300 USD), but for those who can't afford that, the Planetary Imaging PreProcessor (PIPP) has a great batch debayer function, which I've also used, and it works great. On the plus side, it's a free and easy program.

Mar 11 2021, 08:49 PM

#78

Junior Member   Group: Members Posts: 95 Joined: 11-January 07 From: Amsterdam Member No.: 1584

The Marslife website uses an open-source GPU shader to debayer images on the fly, which is blazingly fast.

While it's not really useful for non-coders, I thought I'd share this solution as a reference. It's pretty well documented, too: Efficient, High-Quality Bayer Demosaic Filtering on GPUs, by Morgan McGuire.

Mar 12 2021, 12:37 AM

#79

Senior Member     Group: Members Posts: 4247 Joined: 17-January 05 Member No.: 152

QUOTE The Marslife website uses an open source GPU shader to debayer images 'on-the-fly' which is blazingly fast.

Thanks; I was wondering how you deBayered the Mastcam sequences so fast. Incidentally, the Malvar et al. interpolation scheme you use is the same as that used onboard MSL to deBayer when JPEGs are sent down; see the MSSS camera specification. (And that's the scheme I use in my own code.) Anyway, deBayering M20 frames is a breeze; dealing well with the JPEG-compressed Bayered images from MSL is hard work!
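For reference, here is a plain-NumPy CPU sketch of the Malvar-He-Cutler (2004) 5x5 interpolation kernels mentioned above. It is not the Marslife shader or the MSL onboard code. It assumes an RGGB mosaic (the pattern suggested for PIPP later in this thread; if colours come out swapped, try another phase), an image at least 3x3, and reflected borders. The function names are hypothetical:

```python
import numpy as np

# Malvar-He-Cutler kernels; each sums to 8 before the 1/8 scaling.
G_AT_RB = np.array([[ 0, 0, -1, 0,  0],
                    [ 0, 0,  2, 0,  0],
                    [-1, 2,  4, 2, -1],
                    [ 0, 0,  2, 0,  0],
                    [ 0, 0, -1, 0,  0]], dtype=float) / 8.0
# R or B at a green site whose same-colour neighbours lie left/right.
RB_AT_G_ROW = np.array([[ 0,  0, 0.5,  0,  0],
                        [ 0, -1, 0.0, -1,  0],
                        [-1,  4, 5.0,  4, -1],
                        [ 0, -1, 0.0, -1,  0],
                        [ 0,  0, 0.5,  0,  0]], dtype=float) / 8.0
# Same, but with the same-colour neighbours above/below.
RB_AT_G_COL = RB_AT_G_ROW.T
# R at B sites and B at R sites (same-colour neighbours on the diagonals).
RB_AT_BR = np.array([[ 0.0, 0, -1.5, 0,  0.0],
                     [ 0.0, 2,  0.0, 2,  0.0],
                     [-1.5, 0,  6.0, 0, -1.5],
                     [ 0.0, 2,  0.0, 2,  0.0],
                     [ 0.0, 0, -1.5, 0,  0.0]], dtype=float) / 8.0

def _filter5(img, k):
    """5x5 correlation with reflected borders (these kernels are symmetric)."""
    h, w = img.shape
    p = np.pad(img, 2, mode="reflect")
    out = np.zeros((h, w))
    for dy in range(5):
        for dx in range(5):
            if k[dy, dx] != 0:
                out += k[dy, dx] * p[dy:dy + h, dx:dx + w]
    return out

def demosaic_malvar_rggb(cfa):
    """Return an (H, W, 3) float image from a single-channel RGGB mosaic."""
    cfa = np.asarray(cfa, dtype=float)
    h, w = cfa.shape
    yy, xx = np.mgrid[0:h, 0:w]
    r = (yy % 2 == 0) & (xx % 2 == 0)
    g_rrow = (yy % 2 == 0) & (xx % 2 == 1)   # green in a red row
    g_brow = (yy % 2 == 1) & (xx % 2 == 0)   # green in a blue row
    b = (yy % 2 == 1) & (xx % 2 == 1)

    g_est = _filter5(cfa, G_AT_RB)
    row_est = _filter5(cfa, RB_AT_G_ROW)
    col_est = _filter5(cfa, RB_AT_G_COL)
    diag_est = _filter5(cfa, RB_AT_BR)

    red = np.select([r, g_rrow, g_brow, b], [cfa, row_est, col_est, diag_est])
    green = np.where(r | b, g_est, cfa)
    blue = np.select([b, g_brow, g_rrow, r], [cfa, row_est, col_est, diag_est])
    return np.stack([red, green, blue], axis=-1)
```

The measured sample is kept at each site, and only the two missing colours are interpolated. This is why the method stays sharp compared with plain bilinear.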

Mar 12 2021, 01:32 AM

#80

Junior Member   Group: Members Posts: 20 Joined: 6-August 20 Member No.: 8852

QUOTE i use Gmic's built in program " bayer2rgb" https://gmic.eu/ it is a commandline tool

```shell
gmic InputImage.png bayer2rgb 1,1,1 -o OutputImage.png
```

now they will be a bit "yellow/green" do to the contrast stretch that these png's have had done to them

Thank you!

Mar 12 2021, 01:48 AM

#81

Junior Member   Group: Members Posts: 20 Joined: 6-August 20 Member No.: 8852

QUOTE I must admit - I've found Gmic's debayer performance to be pretty ugly - especially at the edge of images. I've been using PIPP which can batch debayer images and I've been very pleased with the results https://sites.google.com/site/astropipp/

I don't know how to perform batch operations; only one picture is output. Can you teach me? Thank you!

Mar 12 2021, 01:49 AM

#82

Junior Member   Group: Members Posts: 20 Joined: 6-August 20 Member No.: 8852

QUOTE I'm using Pixinsight which is an astrophotography processing suite, it has a real nice debayer script. I use it for my astrophotography and it is expensive, 300 USD, but for those that can't afford that, the Planetary Image Pre-processor (PIPP) has a great batch debayer function in it which I've also used and it works great. On the plus side it's a free and easy program.

Yes, this software is expensive. I don't know how to perform batch operations; only one picture is output. Can you teach me? Thank you!

Mar 12 2021, 03:02 AM

#83

Member    Group: Members Posts: 691 Joined: 21-December 07 From: Clatskanie, Oregon Member No.: 3988

For PIPP, all you need to do is open all of the images that you are going to debayer, then on the Input tab select the option "Debayer Monochrome frames" and set the pattern to RGGB. You can also choose the processing algorithm if you want; VNG or Bilinear works well, but you can experiment. To see a preview before final processing, click the "Test Options" button in the top right. If it looks good, move to the Output tab and choose your output file options. Once ready, go to the "Do Processing" tab and click "Start Processing". All the images that you loaded should be batch-debayered into a new folder.

Mar 12 2021, 04:58 AM

#84

Member    Group: Members Posts: 219 Joined: 14-November 11 From: Washington, DC Member No.: 6237

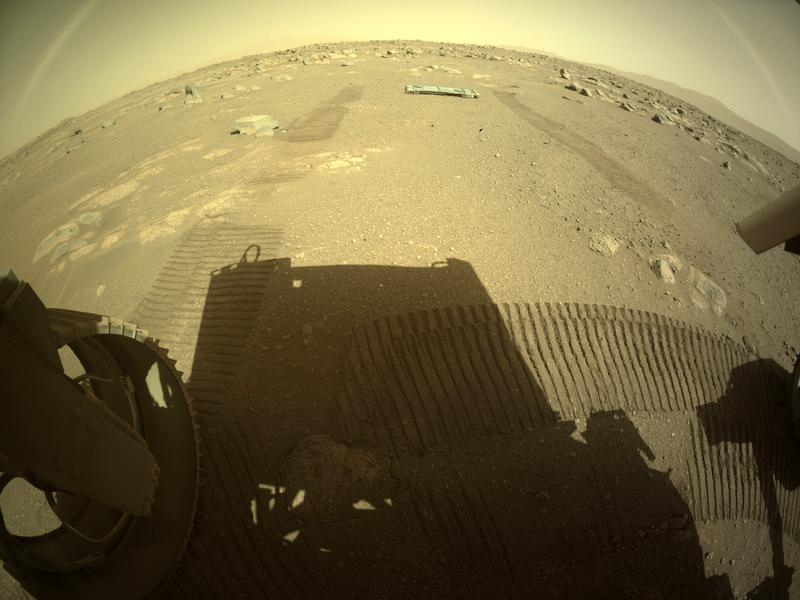

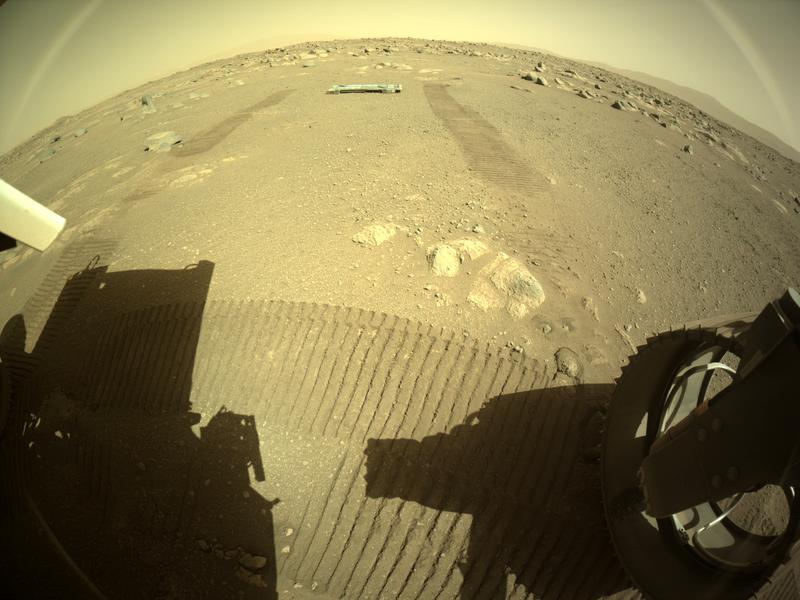

QUOTE If "camera_position" is correct at (2.08195,-0.111422,-1.69411) then WATSON's eyeball would be a fairly high 1.7m off the ground, ... ... Not convinced ...

Well, maybe I'm not totally nuts. These pictures are from before and after the WATSON test images, and the arm looks pretty high to me. That implies roughly the scale bar shown, so the larger rocks are ~2 cm.

Mar 12 2021, 06:54 AM

#85

Junior Member   Group: Members Posts: 20 Joined: 6-August 20 Member No.: 8852

QUOTE For PIPP, all you need to do is open up all of the images that you are going to debayer, then on the input tab select the option "Debayer Monochrome frames" and the pattern to RGGB. You can also choose the processing algorithm as well if you want VNG or Bilinear works good, you can experiment. To see a preview before final processing, you can click the "Test Options" button in the top right. If it looks good, you can move to the output tab and choose your output file options. Once ready, just go to the "Do Processing" tab and click "Start Processing". All the images that you loaded should be batch debayerd into a new folder.

Thank you! The earlier steps worked, but the final step failed. I don't know how to solve it.

Mar 12 2021, 09:17 AM

#86

Junior Member   Group: Members Posts: 20 Joined: 6-August 20 Member No.: 8852

Mar 12 2021, 10:14 AM

#87

Junior Member   Group: Members Posts: 20 Joined: 6-August 20 Member No.: 8852

QUOTE For PIPP, all you need to do is open up all of the images that you are going to debayer, then on the input tab select the option "Debayer Monochrome frames" and the pattern to RGGB. You can also choose the processing algorithm as well if you want VNG or Bilinear works good, you can experiment. To see a preview before final processing, you can click the "Test Options" button in the top right. If it looks good, you can move to the output tab and choose your output file options. Once ready, just go to the "Do Processing" tab and click "Start Processing". All the images that you loaded should be batch debayerd into a new folder.

I found the reason: it was a system language problem.

Mar 12 2021, 03:40 PM

#88

Member    Group: Members Posts: 122 Joined: 19-June 07 Member No.: 2455

I just installed PIPP, and it seems to be a very full-featured program, but I'm certainly lacking a great deal of info. I downloaded a WATSON image of the underbelly that, to my untrained eye, seems like a candidate for debayering, since there are lines and dots across the image that make it look blurry and unprocessed. However, processing it through PIPP didn't seem to do anything. The output looked pretty much like the input, and it certainly was not in color. There were tons of options to choose from, but unfortunately the examples on the website were for a Jupiter video file, not a single image. Any shortcuts someone can point to?

Edit: I did manage to get a color image debayered using G'MIC, just by trial and error. I'll post it in the helicopter area.

Mar 12 2021, 04:54 PM

#89

Senior Member     Group: Members Posts: 2517 Joined: 13-September 05 Member No.: 497

QUOTE If "camera_position" is correct at (2.08195,-0.111422,-1.69411) then WATSON's eyeball would be a fairly high 1.7m off the ground...

I take it back; perhaps this is correct. It should be possible to find the WATSON footprint in the front Hazcam image. I've asked if the focus position can be put back into the public data, but we don't have much control over that.

--------------------
Disclaimer: This post is based on public information only. Any opinions are my own.

Mar 14 2021, 06:13 PM

#90

Senior Member     Group: Members Posts: 4247 Joined: 17-January 05 Member No.: 152

Has anyone figured out how to sort the images at mars.nasa.gov/mars2020/multimedia/raw-images/ by time taken? Selecting a sort in the "sort by" box gives images out of order according to the listed LMST. (E.g., the first few images are out of order when you filter on sol 18.) The corresponding operation on the MSL site works fine.

The corresponding API call

```text
https://mars.nasa.gov/rss/api/?feed=raw_images&category=mars2020&feedtype=json&num=50&page=0&order=sol+desc&&condition_2=18:sol:gte&condition_3=18:sol:lte&extended=sample_type::full,
```

similarly gives an out-of-order JSON file, as do any alternatives I've tried for the "order=" option. Maybe this is related to the timestamp problems that are being worked on?

Mar 14 2021, 08:17 PM

#91

Junior Member   Group: Members Posts: 95 Joined: 11-January 07 From: Amsterdam Member No.: 1584

QUOTE Has anyone figured out how to sort the images at mars.nasa.gov/mars2020/multimedia/raw-images/ by time taken?

Not from the website directly. For the arm animation I made earlier, I sorted the JSON entries on the spacecraft clock time, which is given as "extended/sclk" (and can also be found as part of the image filename).
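The sclk approach can be sketched in a few lines of Python. The helper names are hypothetical, and the sketch assumes each feed entry carries its clock as a string under `extended/sclk`, as in the JSON posted earlier. Entries whose clock isn't numeric (e.g. "UNK") are pushed to the end rather than crashing the sort:

```python
import json
from urllib.request import urlopen

def fetch_feed(url):
    """Download and parse a raw-images JSON feed (needs network access)."""
    with urlopen(url) as resp:
        return json.load(resp)

def _sclk_key(entry):
    """Sort key from extended/sclk; entries without a numeric clock go last."""
    try:
        return (0, float(entry["extended"]["sclk"]))
    except (KeyError, TypeError, ValueError):
        return (1, 0.0)

def sort_by_sclk(images):
    """Return raw-image entries in spacecraft-clock order."""
    return sorted(images, key=_sclk_key)
```

Usage would be along the lines of `sort_by_sclk(fetch_feed(url)["images"])`. This assumes the list sits under `"images"`, as in the feed payload posted at the start of this thread; the single-image detail JSON uses `"image"` instead.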

Mar 15 2021, 10:57 AM

#92

Newbie  Group: Members Posts: 6 Joined: 20-April 05 Member No.: 265

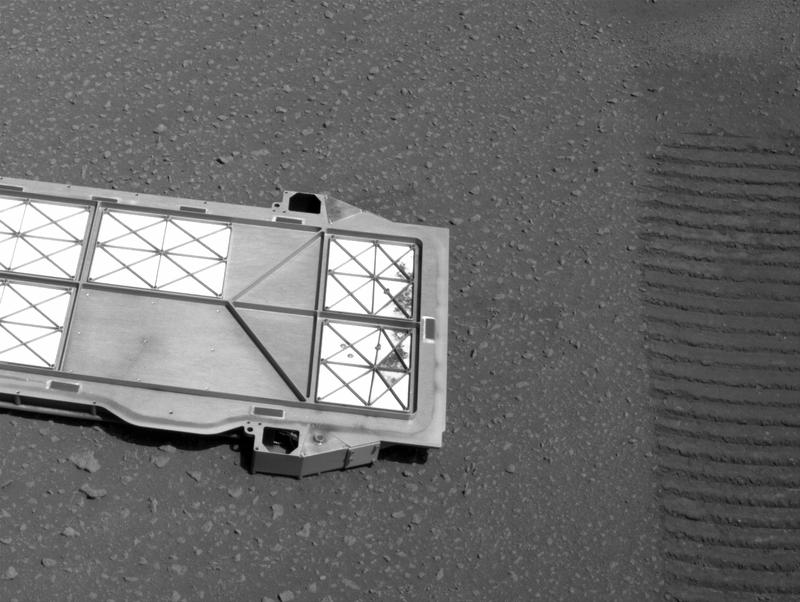

Does anybody know this plate's purpose?

Here are some other pictures:

Mar 15 2021, 11:22 AM

#93

Senior Member     Group: Members Posts: 2429 Joined: 30-January 13 From: Penang, Malaysia. Member No.: 6853

QUOTE Does anybody know this plate's purpose?

It's a 'Bellypan' that protected the Adaptive Caching Assembly (ACA) since launch, but especially during the final part of EDL...

Surface operations on Mars require the Sample Handling Assembly (SHA) to extend approximately 200 mm (~8 inches) below the Rover’s bellypan. Therefore, an ejectable belly-pan was implemented directly below the Adaptive Caching Assembly (ACA) volume, which was released after landing to provide the SHA with an unobstructed volume to extend into during operations. Surface features are then assessed via Rover imaging prior to SHA motion to prevent contact with potential obstacles below the Rover.

Extracted from this PDF titled: Mars 2020 Rover Adaptive Caching Assembly: Caching Martian Samples for Potential Earth Return

Mar 15 2021, 11:25 AM

#94

Newbie  Group: Members Posts: 6 Joined: 20-April 05 Member No.: 265

QUOTE It's a 'Bellypan' that protected the Adaptive Caching Assembly (ACA) since launch, but especially during the final part of EDL... Surface operations on Mars require the Sample Handling Assembly (SHA) to extend approximately 200 mm (~8 inches) below the Rover’s bellypan. Therefore, an ejectable belly-pan was implemented directly below the Adaptive Caching Assembly (ACA) volume, which was released after landing to provide the SHA with an unobstructed volume to extend into during operations. Surface features are then assessed via Rover imaging prior to SHA motion to prevent contact with potential obstacles below the Rover. Extracted from this PDF titled: Mars 2020 Rover Adaptive Caching Assembly: Caching Martian Samples for Potential Earth Return

Thanks!

Mar 15 2021, 09:19 PM

#95

Junior Member   Group: Members Posts: 59 Joined: 4-July 08 Member No.: 4251

QUOTE Does anybody know this plate's purpose?

FYI, this event is discussed in the regular thread: http://www.unmannedspaceflight.com/index.p...=8608&st=60

I think this thread here is supposed to be focused on imagery, e.g. technical discussion of processing, cameras, metadata, etc.

ADMIN: Chris is correct. Let's please post mission event pictures such as the panel deployment in the real-time progress threads (Perseverance Early Drives being the current one) and reserve this thread for technical discussion of the imagery systems and methods.

Mar 16 2021, 12:02 PM

#96

Junior Member   Group: Members Posts: 20 Joined: 6-August 20 Member No.: 8852

After installing the required plugins, how do I perform a batch debayer in ImageJ and GIMP?

Do I need a script? How do I do it?

Mar 16 2021, 12:39 PM

#97

Member    Group: Members Posts: 102 Joined: 29-January 10 From: Poland Member No.: 5205

I use FitsWork to debayer images. It's great!

-------------------- Adam Hurcewicz from Poland

Mar 16 2021, 01:45 PM

#98

Junior Member   Group: Members Posts: 20 Joined: 6-August 20 Member No.: 8852

Mar 16 2021, 11:39 PM

#99

Member    Group: Members Posts: 808 Joined: 10-October 06 From: Maynard Mass USA Member No.: 1241

Use the command-line interface to your tool (if it has one) and a batch file.

-------------------- CLA CLL
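As an example of that approach, here is a small Python driver that loops over a folder of PNGs and builds one G'MIC call per file. It uses the `gmic InputImage.png bayer2rgb 1,1,1 -o OutputImage.png` command quoted earlier in the thread. It assumes `gmic` is on your PATH. The `_rgb` output suffix and the function names are arbitrary, hypothetical choices. It defaults to a dry run that only prints the commands:

```python
import subprocess
from pathlib import Path

def gmic_commands(in_dir, out_dir, pattern="*.png"):
    """Build one G'MIC bayer2rgb command per matching file, in sorted order."""
    out_dir = Path(out_dir)
    cmds = []
    for src in sorted(Path(in_dir).glob(pattern)):
        dst = out_dir / (src.stem + "_rgb.png")
        cmds.append(["gmic", str(src), "bayer2rgb", "1,1,1", "-o", str(dst)])
    return cmds

def run_batch(in_dir, out_dir, dry_run=True):
    """Print the commands (dry run) or execute them one by one, stopping on error."""
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    for cmd in gmic_commands(in_dir, out_dir):
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)
```

The same loop works for any other command-line debayer tool by changing the argument list.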

Mar 17 2021, 12:13 AM

#100

Junior Member   Group: Members Posts: 20 Joined: 6-August 20 Member No.: 8852


RULES AND GUIDELINES Please read the Forum Rules and Guidelines before posting. IMAGE COPYRIGHT |

OPINIONS AND MODERATION Opinions expressed on UnmannedSpaceflight.com are those of the individual posters and do not necessarily reflect the opinions of UnmannedSpaceflight.com or The Planetary Society. The all-volunteer UnmannedSpaceflight.com moderation team is wholly independent of The Planetary Society. The Planetary Society has no influence over decisions made by the UnmannedSpaceflight.com moderators. |

SUPPORT THE FORUM Unmannedspaceflight.com is funded by the Planetary Society. Please consider supporting our work and many other projects by donating to the Society or becoming a member. |
