3D Projecting JunoCam images back onto Jupiter using SPICE

Post #1 | Dec 12 2017, 02:50 PM

Newbie | Group: Members | Posts: 16 | Joined: 4-September 16 | Member No.: 8038

Hi Everyone,

As part of an upcoming VR/VFX-based project, I'm attempting to project the raw images from JunoCam back onto a 3D model of Jupiter, the goal being to do this precisely enough that multiple images from a specific perijove can be viewed on the same model. However, I'm currently having one or two issues calculating the correct JunoCam rotation values, and was hoping someone with more experience might be able to point me in the right direction! My process for processing a single raw image is as follows:

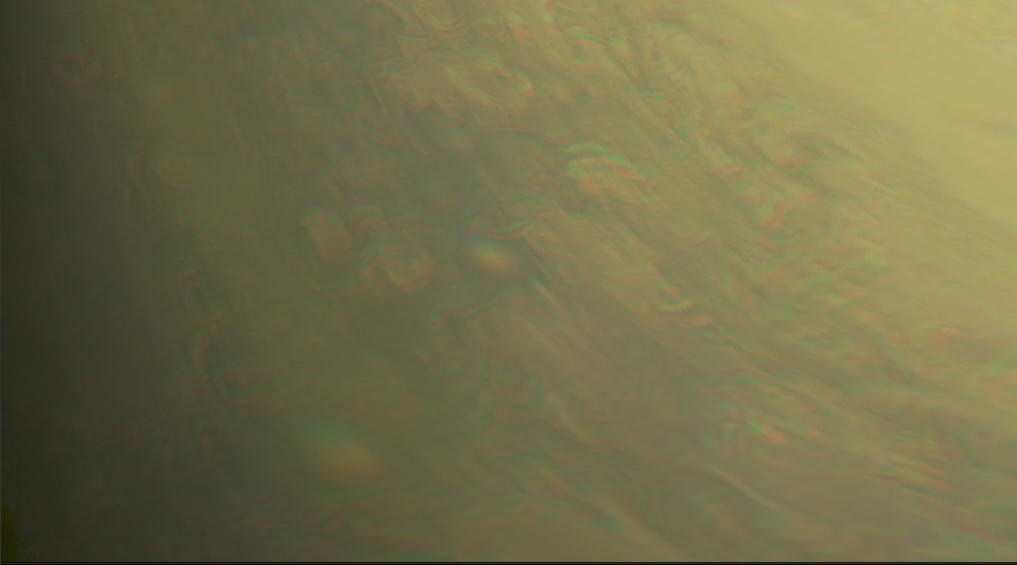

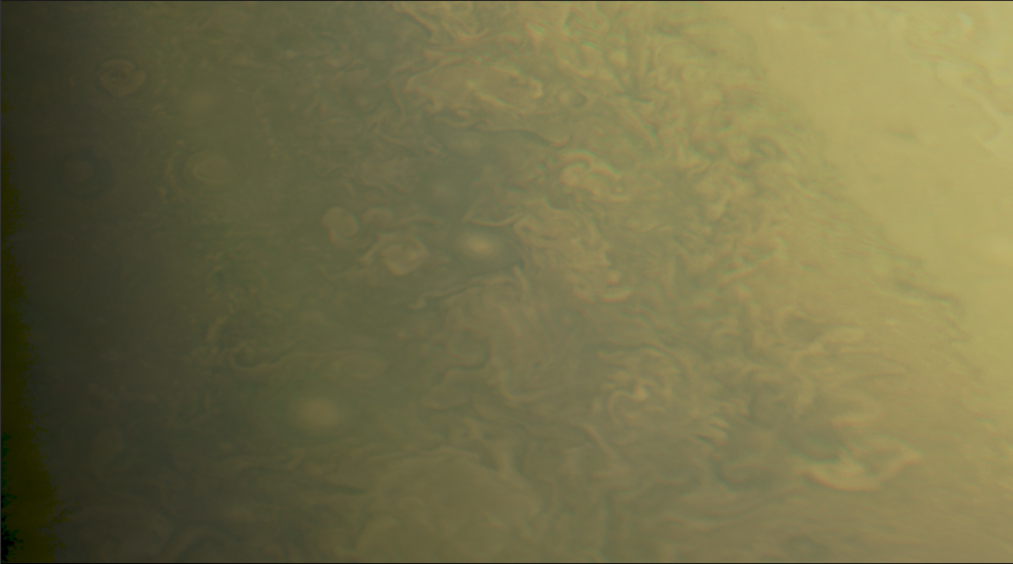

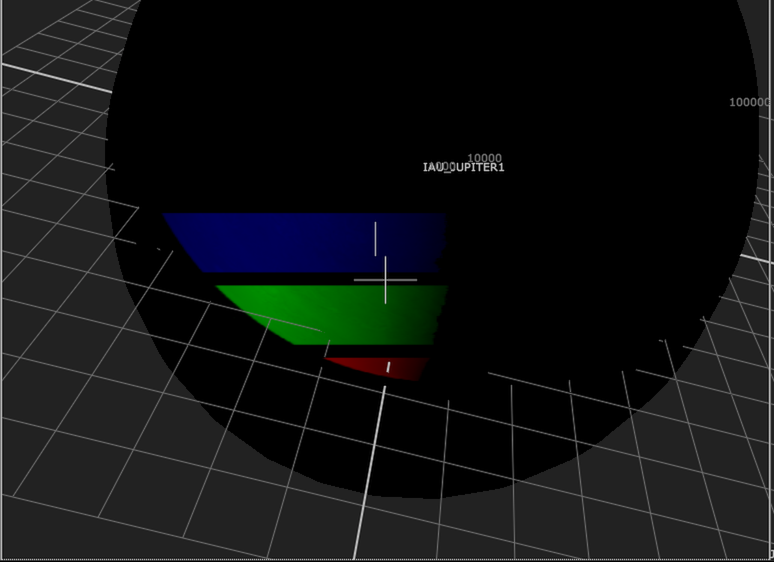

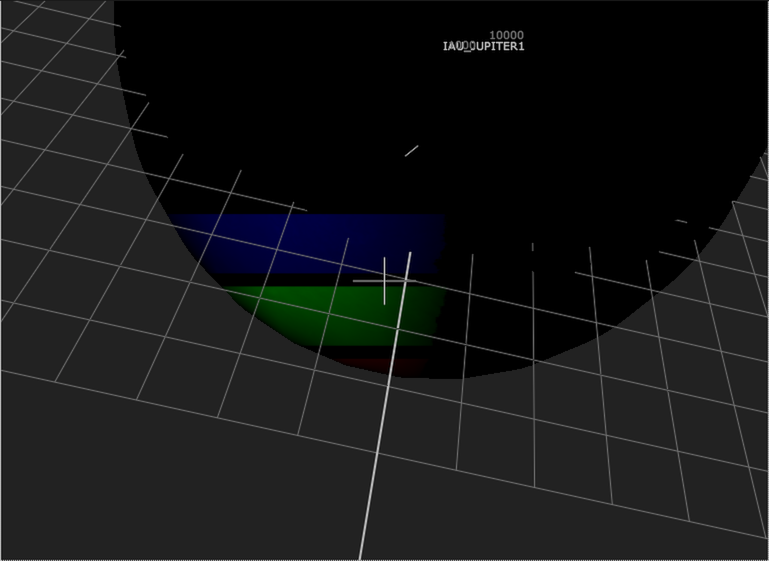

The frame transformations I'm calculating are currently: J2000 >> IAU_JUPITER >> JUNO_SPACECRAFT >> JUNO_JUNOCAM_CUBE >> JUNO_JUNOCAM. Here's the snippet of SpiceyPy code used:

CODE

    import numpy as np
    import spiceypy as spice

    # rotationMatrixToEulerAngles and radiansXYZtoDegreesXYZ are helper
    # functions that are not shown in this post.

    # Calculate the rotation offset between J2000 and IAU_JUPITER
    rotationMatrix = spice.pxform("J2000", "IAU_JUPITER", exposureTimeET)
    rotationAngles = rotationMatrixToEulerAngles(rotationMatrix)
    j2000ToIAUJupiterRotations = radiansXYZtoDegreesXYZ(rotationAngles)

    # Get the position of JUNO_SPACECRAFT relative to JUPITER in the IAU_JUPITER frame, ignoring corrections
    junoPosition, lt = spice.spkpos('JUNO_SPACECRAFT', exposureTimeET, 'IAU_JUPITER', 'NONE', 'JUPITER')

    # Get the rotation of JUNO_SPACECRAFT relative to the IAU_JUPITER frame
    rotationMatrix = spice.pxform("IAU_JUPITER", "JUNO_SPACECRAFT", exposureTimeET)
    rotationAngles = rotationMatrixToEulerAngles(rotationMatrix)
    junoRotations = radiansXYZtoDegreesXYZ(rotationAngles)

    # Get the rotation of the JUNO_JUNOCAM_CUBE to the SPACECRAFT, based upon data in juno_v12.tf
    rotationMatrix = np.matrix([[-0.0059163, -0.0142817, -0.9998805],
                                [0.0023828, -0.9998954, 0.0142678],
                                [-0.9999797, -0.0022981, 0.0059497]])
    rotationAngles = rotationMatrixToEulerAngles(rotationMatrix)
    junoCubeRotations = radiansXYZtoDegreesXYZ(rotationAngles)

    # Get the rotation of JUNO_JUNOCAM to JUNO_JUNOCAM_CUBE, based upon data in juno_v12.tf
    junoCamRotations = [0.583, -0.469, 0.69]

The resultant position coordinates and Euler angles are then transferred to a matching hierarchy of objects in 3D. The result is a JUNO_JUNOCAM 3D camera that should in theory be seeing exactly what is shown in the matching original exposure from steps 1-2.

Using the 8th exposure (calculated time 2017-05-19 07:07:11.520680) of this image taken during perijove 6, zooming in on the final projected result gives me the following image:

As you can see, the RGB alignment is close, but not quite perfect. After doing some additional experimentation, I realised that the solution was to add an additional X rotation of -0.195° to the camera for exposure 1, -0.195°*2 for exposure 2, -0.195°*3 for exposure 3, etc. Applying this additional rotation gives me the following image:

Still not perfect, as this value is no doubt slightly inaccurate, but it's certainly a lot closer. The consistency of this additional rotation (plus the fact that the value required changes between different raw images) makes me think that I'm perhaps missing something obvious in my code above! (I am admittedly not yet compensating for planetary rotation, but I wouldn't expect that to cause a misalignment of this magnitude.)

Problem 2 is that in addition to the alignment offset between exposures, there is another global alignment offset across the entire image set. Looking again at the 8th exposure, this time in 3D space, we see the following:

The RGB bands are from our single exposure, which has been projected through our 3D JunoCam onto the black sphere, which is my stand-in for Jupiter (sitting in the exact centre of the 3D space). As you can see, there is an alignment mismatch. After yet more experimentation, I found that applying a rotation of approximately [-9.2°, 1.7°, -1.2°] to the entire range of exposures (at the JUNOCAM level) more closely aligns our 8th exposure with its expected position on Jupiter's surface; however, this does introduce a very slight drift in subsequent exposures:

I hope that all makes some sense! I'm (probably fairly obviously) new to SPICE, but after reading multiple tutorials/forum posts elsewhere, I still can't seem to pinpoint the one or two things I'm no doubt misunderstanding! I'm incredibly excited about what's possible once I iron out the issues in this process, so if anyone has any suggestions, I'd certainly love to hear them!

Thanks very much in advance!

Matt
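The remark above about planetary rotation can be checked with some quick arithmetic. The following is only a minimal sketch: it assumes a nominal spacecraft spin of 2 rpm (a figure not stated in this thread), uses the 80.84 exposures per Juno rotation quoted elsewhere in this thread, and takes Jupiter's rotation period as roughly 9.925 hours. The function and constant names are illustrative.

```python
# Back-of-envelope comparison of rotation per exposure (illustrative only).

JUNO_SPIN_PERIOD_S = 30.0                    # ASSUMPTION: nominal 2 rpm spin
EXPOSURES_PER_SPIN = 80.84                   # figure quoted elsewhere in this thread
JUPITER_ROTATION_PERIOD_S = 9.925 * 3600.0   # approximate rotation period


def per_exposure_angles():
    """Return (seconds between exposures,
               spacecraft rotation per exposure in degrees,
               Jupiter rotation per exposure in degrees)."""
    dt = JUNO_SPIN_PERIOD_S / EXPOSURES_PER_SPIN
    spacecraft_deg = 360.0 / EXPOSURES_PER_SPIN
    jupiter_deg = 360.0 * dt / JUPITER_ROTATION_PERIOD_S
    return dt, spacecraft_deg, jupiter_deg


if __name__ == "__main__":
    dt, sc_deg, jup_deg = per_exposure_angles()
    print(f"interval between exposures: {dt:.4f} s")
    print(f"spacecraft rotation per exposure: {sc_deg:.3f} deg")
    print(f"Jupiter rotation per exposure: {jup_deg:.5f} deg")
```

Under these assumptions the planet turns by less than 0.004° between consecutive exposures, roughly fifty times smaller than the -0.195° per-exposure correction, which is consistent with the expectation that planetary rotation alone would not explain the offset.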

Post #2 | Dec 13 2017, 01:03 AM

IMG to PNG GOD | Group: Moderator | Posts: 2250 | Joined: 19-February 04 | From: Near fire and ice | Member No.: 38

Another possible issue is that, at least in my case, I always need to make small corrections to the pointing. I suspect it's mainly because of this from the kernel mentioned above by Mike:

QUOTE
"We have found that there is a fixed bias of 61.88 msec in the start time with a possible jitter of order 20 msec relative to the reported value..."

The pointing error I get is typically 3-10 pixels. However, it seems obvious to me that here something else is going on; even a 10 pixel pointing error results in much better color alignment (except maybe near the limb).

And this reminds me I really need to spruce up my own Juno-related SPICE code. It's really messy (including lots of code I commented out) because considerable experimentation was required following the Earth flyby to get everything working correctly (including a section of code to optionally correct the pointing).

And just in case: Be sure you are using up-to-date kernels. New versions of some of the instrument/frame kernels were released a few months ago. The new versions are significantly more accurate than the old ones (but nevertheless the old ones didn't result in a significant color misalignment).
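To get a feel for what the quoted timing figures mean in terms of pointing, here is a minimal sketch that converts a timing error into spacecraft rotation and applies the start-time bias when computing per-exposure times. It assumes a nominal spin rate of 12°/s (2 rpm), that the bias is added to the reported start time, and that exposures are indexed from zero; none of these are stated in this thread. The interframe delay is a hypothetical parameter that would come from the image metadata.

```python
# Timing error to spin angle, and biased exposure times (illustrative only).

START_TIME_BIAS_S = 0.06188    # fixed bias quoted from the kernel (61.88 msec)
JITTER_S = 0.020               # "possible jitter of order 20 msec"
SPIN_RATE_DEG_PER_S = 12.0     # ASSUMPTION: nominal 2 rpm spacecraft spin


def timing_error_to_degrees(dt_s, spin_rate_deg_per_s=SPIN_RATE_DEG_PER_S):
    """Spacecraft rotation (degrees) accumulated during a timing error of dt_s seconds."""
    return dt_s * spin_rate_deg_per_s


def corrected_exposure_time(reported_start_s, index, interframe_delay_s,
                            bias_s=START_TIME_BIAS_S):
    """Time of exposure `index` (0-based, ASSUMPTION) in seconds.

    ASSUMPTION: the bias is added to the reported start time, and
    interframe_delay_s is supplied by the caller from the image metadata.
    """
    return reported_start_s + bias_s + index * interframe_delay_s


if __name__ == "__main__":
    print(f"bias   -> {timing_error_to_degrees(START_TIME_BIAS_S):.3f} deg")
    print(f"jitter -> {timing_error_to_degrees(JITTER_S):.3f} deg")
```

With these assumed values, the fixed bias corresponds to roughly 0.74° of spin and the jitter to roughly 0.24°, so both are worth accounting for before hand-tuning any rotation offsets.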

Post #3 | Dec 18 2017, 12:00 AM

Member | Group: Members | Posts: 140 | Joined: 22-July 14 | Member No.: 7220

One thing I have found in my most recent project is to use the specific IDs for the Red, Green, and Blue framelets and compute position and pointing separately based on those. When I'm computing the visible ray surface intercept, I'm doing something similar to:

CODE

    import math
    import spiceypy as spice

    # NAIF instrument IDs for JunoCam and its individual filter framelets
    JUNO_JUNOCAM = -61500
    JUNO_JUNOCAM_BLUE = -61501
    JUNO_JUNOCAM_GREEN = -61502
    JUNO_JUNOCAM_RED = -61503
    JUNO_JUNOCAM_METHANE = -61504

    def surface_point_for_channel_at_et(channel, et):
        # Look up the field of view (frame name and boresight) for this channel
        shape, frame, bsight, n, bounds = spice.getfov(channel, 4, 32, 32)
        # Intersect the boresight ray with Jupiter's ellipsoid
        spoint, etemit, srfvec = spice.sincpt("Ellipsoid", "JUPITER", et, "IAU_JUPITER", "CN+S", "JUNO", frame, bsight)
        # Convert the rectangular intercept point to latitudinal coordinates
        radius, lon, lat = spice.reclat(spoint)
        lon = math.degrees(lon)
        lat = math.degrees(lat)
        return lat, lon, radius

    et = spice.str2et("2016-DEC-11 17:14:13.003")
    spoint_red = surface_point_for_channel_at_et(JUNO_JUNOCAM_RED, et)
    spoint_green = surface_point_for_channel_at_et(JUNO_JUNOCAM_GREEN, et)
    spoint_blue = surface_point_for_channel_at_et(JUNO_JUNOCAM_BLUE, et)

You'll see that at the exact same time, the boresight intercepts will be different. I wonder if this might help resolve your problem.

-- Kevin
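One way to put a number on how different the per-channel boresight intercepts are is to compute the angle between two of the returned (lat, lon) points. The helper below is a hypothetical sketch, not part of SPICE or of the code above: it treats Jupiter as a sphere and uses the haversine formula, so it only gives an approximate angle as seen from the planet's centre.

```python
import math


def angular_separation_deg(lat1, lon1, lat2, lon2):
    """Great-circle angle in degrees between two points given as
    (latitude, longitude) in degrees on a sphere (haversine formula)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlon = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2.0) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlon / 2.0) ** 2
    # Clamp to guard against rounding slightly above 1.0
    return math.degrees(2.0 * math.asin(min(1.0, math.sqrt(a))))


if __name__ == "__main__":
    # Made-up (lat, lon) pairs purely for illustration, not real JunoCam results
    red = (10.0, 120.0)
    green = (10.5, 121.0)
    print(f"{angular_separation_deg(red[0], red[1], green[0], green[1]):.3f} deg")
```

With the tuples returned by `surface_point_for_channel_at_et`, the call would be `angular_separation_deg(spoint_red[0], spoint_red[1], spoint_green[0], spoint_green[1])`.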

Post #4 | Dec 18 2017, 01:45 AM

Senior Member | Group: Members | Posts: 2511 | Joined: 13-September 05 | Member No.: 497

QUOTE
One thing I have found in my most recent project is to use the specific ids for the Red, Green, and Blue framelets and compute position and pointing separately based on those.

You can try that if you want to, but I'm not sure if those boresights have been corrected for distortion -- we use the JUNO_JUNOCAM frame in our recommended processing.

--------------------
Disclaimer: This post is based on public information only. Any opinions are my own.

Matt Brealey | 3D Projecting JunoCam images back onto Jupiter using SPICE | Dec 12 2017, 02:50 PM

Gerald | With 80.84 exposures per Juno rotation you should ... | Dec 12 2017, 06:24 PM

mcaplinger | QUOTE (Matt Brealey @ Dec 12 2017, 06:50 ... | Dec 12 2017, 11:31 PM

Matt Brealey | Hi Everyone, Thanks so much for the responses... | Dec 18 2017, 03:54 PM

Kevin Gill | I'm glad you figured out a solution! The s... | Dec 18 2017, 04:09 PM

Brian Swift | Question for everyone with a raw pipeline using th... | Dec 18 2017, 06:50 PM

mcaplinger | QUOTE (Brian Swift @ Dec 18 2017, 10:50 A... | Dec 19 2017, 05:31 PM

Brian Swift | QUOTE (mcaplinger @ Dec 19 2017, 09:31 AM... | Dec 21 2017, 06:16 PM
