MSL data in the PDS and the Analyst's Notebook, Working with the archived science & engineering data


Apr 10 2016, 07:42 PM

Post #166

Member. Group: Members. Posts: 306. Joined: 4-October 14. Member No.: 7273.

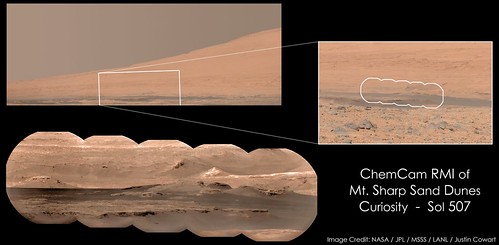

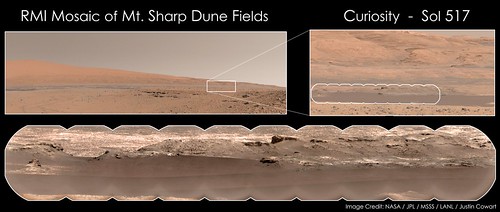

> Just a reminder of how I like to show a full sky (cylindrical projection) sky brightness on Mars, based on some scattering calculations for a progression of solar elevation angles. http://laps.noaa.gov/albers/allsky/mars/al...gb_cyl_mars.gif

Thanks, I'll keep that in hand for future panoramas!

Here are a couple of RMI observations of dune fields around the base of Mt. Sharp on Sols 507 and 517.

Also had the opportunity to do another bit of HDR-type photography with a sunset (er, Earthset) picture acquired on Sol 529:

For this I used PIA17936 as a base, then added some foreground images of Gale Crater acquired during the afternoon of Sol 530. The brightness has been boosted a bit to subjectively match what I think a dark-adapted human eye would see, but there was some distinction between the foreground ridge (the north wall of Dingo Gap) and the crater rim in the original picture.

If you're having any trouble downloading these, click on the pictures to go to the gallery page, and then add 'sizes/o' to the end of the URL. That should bring up the full-size image, which you can then right-click and save.

Apr 15 2016, 04:55 PM

Post #167

Member. Group: Members. Posts: 306. Joined: 4-October 14. Member No.: 7273.

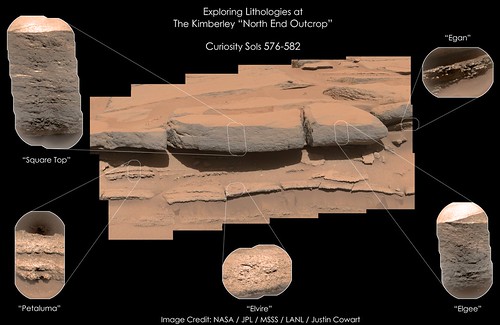

A group of RMI mosaics targeting The Kimberley's "North End Outcrop", showing some of the varied lithologies on display there.

It's really neat to see the variation in grain sizes. The bottom layers have a wide variation in grain size, while the upper layers are much finer overall and almost have a laminated texture to them. A great outcrop to study in general.

Apr 21 2016, 12:12 AM

Post #168

Member. Group: Members. Posts: 306. Joined: 4-October 14. Member No.: 7273.

Phobos setting behind Mt. Sharp.

Moonset Behind Mt. Sharp - Sol 613 by Justin Cowart, on Flickr

For this one I did some noise reduction on a single frame taken by MastCam (M-34) on the evening of Sol 613. The composition of that image wasn't very balanced, so I added a bit of foreground in using some frames from a 360 mosaic taken on Sol 610. Fortunately, these frames were taken in the anti-solar direction - almost no shadows! I adjusted the color to match the illumination conditions better, and blended them all together. I also boosted the contrast on Phobos a little bit to bring out a couple of surface features.

I hope you all enjoy it!

Apr 21 2016, 06:48 AM

Post #169

Member. Group: Members. Posts: 809. Joined: 3-June 04. From: Brittany, France. Member No.: 79.

Amazing work, Justin! Thanks for sharing it with us.

May 18 2016, 09:33 AM

Post #170

Member. Group: Members. Posts: 244. Joined: 2-March 15. Member No.: 7408.

Not really sure the best place to post this, so I'll post it here.

Since Friday, a friend and I have been working to try to generate a tolerable VR experience chronicling the journey toward Mt. Sharp from Curiosity's landing site. I don't know if we've reached tolerable yet, but we've decided to stop working on it for now as it consumes a tremendous amount of storage and processing resources that we need for other things, so we've decided to share the two main products that have come out of it.

The first is a 900+ frame 360-degree video (assuming your browser/platform supports playback of 360 YouTube videos) at 4K resolution that combines 58,589 images from MASTCAM, MARDI, and the Left NAVCAM.

https://www.youtube.com/watch?v=qHJemCKbhF4

Each frame represents a distinct drive counter at which imagery was acquired by any of the three cameras included. Each frame of new imagery is drawn over the previous frame and, at times, it may just be a small area that is changing from frame to frame. The MARDI and MASTCAM imagery is forced to grayscale for consistency with the Left NAVCAM, which provides the bulk of the coverage. We keep all of the imagery oriented based on the site frame (which is aligned to NSEW) rather than the rover nav frame, so Mt. Sharp stays in the same place throughout the entire video.

The second is a headache-inducing (though still fairly immersive) 3-D 360 VR video at 5K resolution, intended to be viewed with a stereoscopic headset (Cardboard and the like). It's kind of like you're riding atop Curiosity as it makes its way toward Mt. Sharp.

https://www.youtube.com/watch?v=jgjse9fGypA

The left eye is seeing imagery from the Left NAVCAM and the Left MASTCAM. The right eye is seeing imagery from the Right NAVCAM and the Right MASTCAM. Somewhere approaching 100,000 (probably in the 75,000-85,000 range) images from these cameras are combined to create this video.

Color imagery has been allowed (in fact, preferred) when available, and the rest of the imagery has been tinted by a vertical gradient calculated from the available color imagery to reduce the headache you'll experience as your brain tries to match NAVCAM imagery in one eye to MASTCAM imagery in the other. For this video, rather than present every drive counter with imagery as its own frame, the 900+ frames originally generated by the process were further processed to guarantee that significant changes over at least half of the horizontal range must occur before a new frame is allowed, reducing the frame set to a final length of 361 frames, played here at 2 frames per second.
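The frame-thinning rule described for the second video can be sketched roughly as follows. This is only one possible reading: the post does not say how "significant change" was measured, so the sketch assumes each candidate frame is summarized as the set of horizontal (azimuth) columns that received new imagery. The function name `thin_frames` and its inputs are hypothetical.

```python
def thin_frames(changed_columns_per_frame, total_columns, min_fraction=0.5):
    """Return indices of frames to keep.

    changed_columns_per_frame: one iterable of column indices per candidate
    frame, listing the horizontal columns that got new imagery (assumed input).
    A frame is kept once the columns changed since the last kept frame cover
    at least min_fraction of the full horizontal range.
    """
    kept = []
    pending = set()  # columns changed since the last kept frame
    for index, changed in enumerate(changed_columns_per_frame):
        pending |= set(changed)
        if len(pending) / total_columns >= min_fraction:
            kept.append(index)
            pending.clear()
    return kept


# Toy example: 8 columns across the panorama, 5 candidate frames.
candidates = [{0, 1}, {2, 3}, {0}, {4, 5, 6, 7}, {1}]
kept_indices = thin_frames(candidates, total_columns=8)
```

Small updates accumulate until together they cover half of the horizontal range, at which point a single combined frame is emitted.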

May 19 2016, 08:20 AM

Post #171

Junior Member. Group: Members. Posts: 59. Joined: 18-July 07. From: London, UK. Member No.: 2873.

Herobrine - I really admire this attempt, thanks for sharing!

I have to declare an interest, as I recently got a small grant in the UK to trial using VR for giving public engagement talks about Mars. We're going to build a simple app for Google Cardboard, and provide all the viewers for the audience, but the one thing I am lacking at the moment is a good set of image data. It'll most likely involve a tour through MSL's mission so far, and so I've been trying to collate specific images that might be good for VR.

So seeing you put all the images together in a VR video was very impressive! But to keep the size of the app down we're unlikely to run video, and will just use a series of images instead. So I was wondering if you know of any good sources for these VR scenes, or have output any particularly good VR 'frames' from your videos that could be used by others. Of course, any other tips would be a great help.

Keep up the good work!

May 19 2016, 06:24 PM

Post #172

Member. Group: Members. Posts: 244. Joined: 2-March 15. Member No.: 7408.

Well, I'll say that I wouldn't recommend using the frames we produced for the video. The video frames were produced by an entirely automated process; no manual work was done on any of the input images or output frames. The resulting frames suffer from a myriad of issues. To name a few: there are extreme NAVCAM frame brightness inconsistencies; nothing is stitched (it's all just projected naively using the camera model defined by the input data product); all sorts of different undesirable imagery is included in the frames (some minimal filtering was incorporated into the automated process, like not including images with negative solar elevations, but images of calibration targets, checkouts of instruments, MASTCAM imaging of the arm/turret, etc. still appear); floating hilltops and rover structure get left behind until new imagery finally covers them; MASTCAM and NAVCAM imagery are blindly included together for each eye, despite MASTCAM having a 24.5 cm stereo baseline while NAVCAM's is 42.4 cm (they're not even close). Some of those issues contribute heavily to the headache-inducing quality of the video, but most are minimized by the motion and constantly changing imagery, so your brain can smooth out some of the flaws and inconsistencies temporally and make some sense of it.

When you're dealing with a single static frame, you're going to want a much more consistent, seamless full-sphere stereo pair, which means careful selection and manual editing. A typical human has an interpupillary distance of around 6.5 cm, so MASTCAM's 24.5 cm is more reasonable than NAVCAM's 42.4 cm. Between the two, MASTCAM is also the only one that acquires color imagery, so it has that going for it, which is nice. The HAZCAMs get you much closer to a human stereo baseline but they're just too low to the ground and you're never going to get decent coverage using them. MASTCAM and NAVCAM are at a pretty good height. They're up at the height of a freakishly tall person's eyes, which is fine; the extra height helps a little with the exaggerated stereo baseline.

For the sky, I'd recommend not using MSL imagery at all. scalbers has a nice GIF of simulated Martian sky scattering for different solar elevations somewhere around here. Here it is: http://laps.noaa.gov/albers/allsky/mars/al...gb_cyl_mars.gif I'd pick a solar elevation you like from there and use that as your sky.

For the rest, ideally, you'd identify places where Curiosity acquired 360 MASTCAM mosaics and use that for most of the lower half of your sphere. Different MASTCAM mosaics yield different coverage, so identifying the ones with the most imagery would be helpful. One of your biggest challenges is likely to be getting decent right-eye MASTCAM coverage. There are a fair number of left MASTCAM mosaics with decent coverage, but decent right MASTCAM coverage is hard to find. I would have looked into that early this morning, because I'm interested too, but all of the MSL data volumes in PDS, except for MSLNAV_1XXX, vanished last night and only just reappeared about 5 minutes ago (and have been phasing in and out of some transient existence since then, but mostly seem to be back now). I'm pretty sure I've seen at least one place where right MASTCAM had pretty good sphere coverage; I'll check later.
Once you have a sky and some decent-though-patchy MASTCAM coverage, you're mostly left with NAVCAM to fill in the rest (MAHLI has good coverage at times, since it does self-portraits, but that's never going to combine with MASTCAM imagery for good stereo). You could just fill in the blanks with NAVCAM and tint it with a hue and saturation similar to the surrounding MASTCAM imagery, but you're going to have depth inconsistency across that boundary due to the difference in stereo baseline. Compared to most VR experiences I've viewed, that probably isn't the worst thing in the world.

It would be possible, however, using the XYZ data products for NAVCAM, to reproject the surface geometry from a right NAVCAM frame into the camera model for the right MASTCAM, which would correct the depth inconsistency. I've actually done that before with good results. The parallax from the right NAVCAM to the right MASTCAM is pretty small, so occlusion doesn't cause much of a problem for reprojection (at least, it won't in most cases). NAVCAM's XYZ data only reaches out to a certain distance, so you won't be reprojecting Mt. Sharp (not that you'd need to) or anything, but it's likely that most of your MASTCAM coverage gap is going to be near the bottom of your sphere anyway, so a lack of geometry data near the horizon isn't likely to be a big issue.

For the very bottom of the sphere, you might get lucky and find a MARDI frame at that spot, but I guess that might not even matter, depending on whether you want them to see terrain or rover deck when they look down.

Tonight, if I have time, I'll run a script over the MASTCAM index file and have it spit out places with the best right (and left) MASTCAM coverage. I'll let you know what I find.
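For anyone wanting to try the NAVCAM-to-MASTCAM reprojection idea, here is a minimal sketch of the projection step. It assumes a plain CAHV pinhole model (center C, axis A, horizontal vector H, vertical vector V) for the target camera and ignores any lens-distortion terms the real camera-model labels may carry. It also assumes the XYZ points are already expressed in the same coordinate frame as the camera model. The function names and the dictionary-based image are illustrative only and are not taken from the poster's program.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def project_cahv(point, c, a, h, v):
    """Project a 3-D point to (column, row) with a CAHV pinhole model.

    Returns None when the point lies on or behind the camera plane.
    """
    d = sub(point, c)
    depth = dot(d, a)
    if depth <= 0:
        return None
    return (dot(d, h) / depth, dot(d, v) / depth)


def reproject(samples, c, a, h, v, width, height):
    """Splat (xyz, value) samples into the target camera's image.

    Returns {(col, row): value}; when several samples land on the same
    pixel, the one closest to the camera wins (a simple depth buffer).
    """
    image, nearest = {}, {}
    for point, value in samples:
        pixel = project_cahv(point, c, a, h, v)
        if pixel is None:
            continue
        col, row = math.floor(pixel[0]), math.floor(pixel[1])
        if not (0 <= col < width and 0 <= row < height):
            continue
        depth = dot(sub(point, c), a)
        if (col, row) not in nearest or depth < nearest[(col, row)]:
            nearest[(col, row)] = depth
            image[(col, row)] = value
    return image


# Toy camera at the origin looking along +X: 100 px focal length,
# image center at column 50, row 40 (all values made up for illustration).
C, A = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
H, V = (50.0, 100.0, 0.0), (40.0, 0.0, 100.0)
```

Forward splatting like this leaves holes wherever the source samples are sparse, so a real pipeline would likely need some interpolation or mesh rendering on top.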

May 19 2016, 08:26 PM

Post #173

Junior Member. Group: Members. Posts: 30. Joined: 8-September 14. From: London, UK. Member No.: 7254.

> Herobrine - I really admire this attempt, thanks for sharing! I have to declare an interest, as I recently got a small grant in the UK to trial using VR for giving public engagement talks about Mars. We're going to build a simple app for Google Cardboard, and provide all the viewers for the audience, but the one thing I am lacking at the moment is a good set of image data.

This sounds a bit like my Mars View Android app (inspired by Midnight Planets for iOS), and I now live in London. I can't be involved in your grant since I'm otherwise employed, but perhaps we should chat (in person, if you're also in London)?

May 19 2016, 09:09 PM

Post #174

Member. Group: Members. Posts: 244. Joined: 2-March 15. Member No.: 7408.

I did a scripted check of the MASTCAM index file, filtering out thumbnails (I only allowed letters C and E), additional versions of another image (C00 vs E01 vs E02), anything monochromatic, anything taken when the Sun was below the horizon, anything labeled as a "redo" or "reshoot" (to avoid double counting), anything described as being for calibration, and anything not actually imaging the Martian terrain (instrument assessment, Sun imaging, etc.) and, given those criteria, it looks like Site 6 Drive 0 is the winner for most right MASTCAM coverage, at least in terms of number of images (there may be some overlapping areas of coverage).

The right MASTCAM acquired useful (for your purposes) imagery from that position on Sols 166, 172, 173, 179, 184-186, 188, 189, 192-194, 198, 199, 227, 232-234, and 269-271, for a total of 1,163 images.

Next best were (Site/Drive):

5/104 with 858 images
6/704 with 570 images
5/388 with 537 images
31/1330 with 487 images
6/804 with 338 images
5/1858 with 287 images
30/740 with 275 images
48/1570 with 220 images

There's a pretty big gap where no drive positions have 200 or more right MASTCAM images. The best right MASTCAM image count between Site 7 and Site 29 was at 26/708 with 142 images. Between Site 7 and there, the best I found was at 15/1230 with 109 images.

I checked the rationale descriptions for all of the counted images at each of the drive positions listed above to make sure none of the images that survived the filtering sounded like something that shouldn't be included, so those should be reliable numbers.

Edit: Performing the same scripted check, but for left MASTCAM, the best (any position with over 200 images) were:

6/0 (again) with 1,135 images
31/1330 with 701 images
5/388 with 626 images
6/704 with 570 images
6/804 with 379 images
5/1858 with 378 images
31/0 with 356 images
30/740 with 302 images
49/1216 with 291 images
27/1124 with 241 images
50/848 with 237 images
48/1570 with 220 images
49/2626 with 213 images
26/1102 with 211 images

The ones I listed separately for right MASTCAM, 26/708 and 15/1230, had 170 and 166 left MASTCAM images, respectively. 5/104, which had 858 for right, had only 64 for left, but keep in mind that the left MASTCAM has a larger field of view, so fewer are needed to provide good coverage.
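A scripted check along these lines might look something like the sketch below. The poster's actual script is not shown, so every field name here (`instrument`, `product_type`, `solar_elevation`, `rationale`, `image_id`, `site`, `drive`) is a hypothetical stand-in and would need to be mapped to the real columns of the MASTCAM index file. The thumbnail letter `I` in the test data is likewise invented. The monochrome and "not imaging terrain" filters from the post are left out for brevity.

```python
from collections import Counter

# Words in the rationale text that disqualify an image (per the post's criteria).
EXCLUDED_WORDS = ("redo", "reshoot", "calibration")


def count_usable_images(rows, instrument):
    """Count usable images per (site, drive) for one camera.

    rows: iterable of dicts with the hypothetical keys described above.
    """
    seen_ids = set()
    counts = Counter()
    for row in rows:
        if row["instrument"] != instrument:
            continue
        if row["product_type"] not in ("C", "E"):
            continue  # anything else is treated as a thumbnail
        if float(row["solar_elevation"]) <= 0:
            continue  # Sun below the horizon
        rationale = row["rationale"].lower()
        if any(word in rationale for word in EXCLUDED_WORDS):
            continue
        if row["image_id"] in seen_ids:
            continue  # another version of an image already counted
        seen_ids.add(row["image_id"])
        counts[(int(row["site"]), int(row["drive"]))] += 1
    return counts


def make_row(instrument, product_type, elevation, rationale, image_id, site, drive):
    return {"instrument": instrument, "product_type": product_type,
            "solar_elevation": elevation, "rationale": rationale,
            "image_id": image_id, "site": site, "drive": drive}


demo_rows = [
    make_row("MR", "C", "30", "Terrain mosaic", "a", "6", "0"),
    make_row("MR", "E", "30", "Terrain mosaic", "a", "6", "0"),   # duplicate version
    make_row("MR", "E", "25", "Terrain mosaic", "b", "6", "0"),
    make_row("MR", "I", "25", "Terrain mosaic", "c", "6", "0"),   # thumbnail
    make_row("MR", "C", "-5", "Twilight imaging", "d", "6", "0"),
    make_row("MR", "C", "10", "Calibration target", "e", "6", "0"),
    make_row("ML", "C", "10", "Terrain mosaic", "f", "5", "104"),
]
right_counts = count_usable_images(demo_rows, "MR")
```

Calling `right_counts.most_common()` then yields a ranking of (Site, Drive) positions, in the same shape as the lists in the post.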

May 19 2016, 09:19 PM

Post #175

Administrator. Group: Admin. Posts: 5172. Joined: 4-August 05. From: Pasadena, CA, USA, Earth. Member No.: 454.

Also good would be the MAHLI self-portraits.

The Rocknest and John Klein sites are the best in terms of moderately complete 360-degree coverage in both left and right eyes, but of course Mastcam never imaged the deck until Namib Dune so even relatively complete pans are frustratingly incomplete for VR purposes. I worked with some folks at Google to do some Cardboard VR projects with Mars data and we found it much more satisfying to go back to Spirit data. The Spirit story was highly satisfying as told through Google Cardboard VR.

May 19 2016, 10:14 PM

Post #176

Junior Member. Group: Members. Posts: 30. Joined: 8-September 14. From: London, UK. Member No.: 7254.

> I worked with some folks at Google to do some Cardboard VR projects

Emily, would you mind telling me through private message (here or via public@tobias-thierer.de) who at Google you worked with? I work at Google myself and would love to connect with like-minded co-workers. Alternatively, tell them my name (Tobias Thierer) and ask them to contact me internally.

May 20 2016, 02:35 AM

Post #177

Member. Group: Members. Posts: 244. Joined: 2-March 15. Member No.: 7408.

@pgrindrod

I put together an example of some of the stuff I suggested.

https://www.youtube.com/watch?v=UyviCsjGsfg

You'll probably want to pause it after it starts because it only lasts 10 seconds.

That's all of the Site 6 Drive 0 MASTCAM imagery that survived the filtering criteria I mentioned before. The left eye is made from 1,133 ML frames and the right eye is made from 1,100-some MR frames. Still no stitching, and the 360 mosaic was generated automatically by a program I wrote, but I did do some manual combining of a version that averages overlapping imagery and a version that just draws the newer imagery on top of the older imagery. I took a frame from scalbers' GIF and overlaid some extra dust on it to better match the imagery at the horizon and put that behind everything as my sky. I didn't try to add NAVCAM imagery as filler yet; I might later.

At least this serves as a reference for what you can get with minimal effort and best-case imagery availability.
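The two compositing variants mentioned in this post (averaging overlapping imagery versus drawing newer imagery on top of older) can be illustrated with a small sketch. It assumes each projected image has already been reduced to a mapping from mosaic pixel to brightness, listed oldest first. That representation and the function names are assumptions made for illustration, not the poster's program.

```python
def composite_newest(layers):
    """Later layers overwrite earlier ones wherever they overlap."""
    mosaic = {}
    for layer in layers:
        mosaic.update(layer)
    return mosaic


def composite_average(layers):
    """Each mosaic pixel is the mean of every layer that covers it."""
    totals, counts = {}, {}
    for layer in layers:
        for pixel, value in layer.items():
            totals[pixel] = totals.get(pixel, 0.0) + value
            counts[pixel] = counts.get(pixel, 0) + 1
    return {pixel: totals[pixel] / counts[pixel] for pixel in totals}


# Two overlapping layers, oldest first; keys are (column, row) in the mosaic.
older = {(0, 0): 10.0, (1, 0): 20.0}
newer = {(1, 0): 40.0}
newest_result = composite_newest([older, newer])
average_result = composite_average([older, newer])
```

Averaging tends to hide brightness steps between frames but can blur misaligned detail, while newest-on-top keeps detail sharp at the cost of visible seams, which may be why a manual mix of the two was used.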

May 25 2016, 06:53 PM

Post #178

Junior Member. Group: Members. Posts: 43. Joined: 14-December 12. Member No.: 6784.

The amazing Blender plugin and video by phase4 inspired me to pick up Blender and try it out. Here are some results!

A black & white tour skirting around Mount Remarkable back at the Kimberley:

https://www.youtube.com/watch?v=qwrqC3CDukI

A color video near Mount Remarkable, this time over a much more limited area. Here I manually warped Mastcam color imagery to the Navcam meshes.

https://www.youtube.com/watch?v=pHKYYaswDkA

A combination of Mars Express HRSC, MRO HiRISE, Navcam, and Mastcam data, used to create a large sweeping path over Mount Sharp and all the way back down to the Kimberley again, all in color, this time with a little more movement around Mount Remarkable. This last video had some serious rendering artifacts, so the camera movement is a little awkward in order to pan/crop all those bits out (watch out if you are easily made motion-sick!).

https://www.youtube.com/watch?v=ik-nW1RMzaM...eature=youtu.be

A second version of the first b&w video with the levels unadjusted:

https://www.youtube.com/watch?v=mrZYHkRZ7-g

Enjoy!

Jun 1 2016, 10:09 AM

Post #179

Junior Member. Group: Members. Posts: 43. Joined: 14-December 12. Member No.: 6784.

Here is one more, again using Phase4's plugin, comprising three separate sites: one on sol 595 and two on sol 603. All have been colorized using Mastcam images.

https://www.youtube.com/watch?v=WzP3Is700cY

Jun 1 2016, 10:42 AM

Post #180

Member. Group: Members. Posts: 923. Joined: 10-November 15. Member No.: 7837.

Many thanks for sharing your incredible work Sittingduck! This is exactly the kind of thing I wanted to see but my Blender-fu was not up to task.

If I may, I'd suggest making the videos longer... a lot longer. There is a lot to see! The transition from Mt Sharp to Remarkable was especially good. Really looking forward to seeing what you come up with next.
