Juno PDS data

Mar 2 2018, 10:51 AM

Post #76

Senior Member     Group: Members Posts: 2346 Joined: 7-December 12 Member No.: 6780

Without any warranty, and not even tested: here, in the form of a manually modified SPICE instrument kernel, are the entirely heuristic Brownian distortion parameters and focal length, in ik notation, that I've mostly been working with over the last year or so.

juno_junocam_v02_ge01.txt ( 15.32K )

Number of downloads: 683

(Rename the extension from txt to ik.) These values are without any formal empirical justification, and are subject to change without prior notice. I'm planning to work on a refined and empirically justified version, but not in the short run.

Mar 2 2018, 08:48 PM

Post #77

Member    Group: Members Posts: 427 Joined: 18-September 17 Member No.: 8250

Juno28g, a JunoCam raw processing pipeline implemented in Mathematica, is available under permissive open source license at https://github.com/BrianSwift/JunoCam

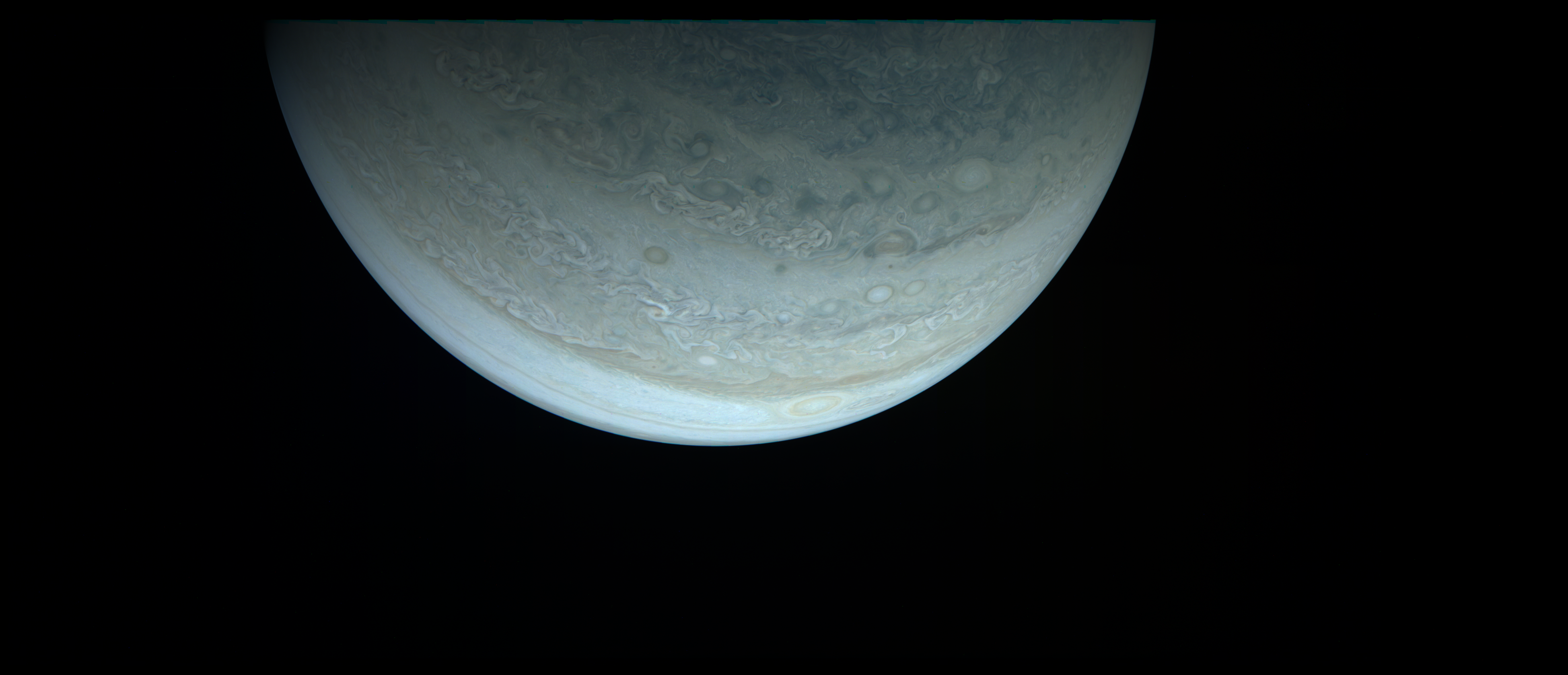

This basic pipeline implements pixel value decompanding, lens distortion correction, equirectangular projection, and assembly of raw frames to a final image. Only a per-channel scaling is applied for color balance, leaving beautification in Photoshop to the userís artistic interpretation. Produces usable images with defaults. But the appearance of seams can be reduced by refinement of parameters related to Juno spin rate and JunoCam orientation relative to the spin axis. Works with MissionJuno website formatted -raw.png and PDS .IMG or .IMG.gz raw image files. Output format is 16-bit PNG. Produces approach/departure movies. The default lens model is Brown K1,K2,P1,P2. However, more baroque models involving chromatic aberration and principal point offsets are supported. PJ11_27 processed with this pipeline:
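For readers unfamiliar with the "Brown K1, K2, P1, P2" lens model mentioned in this post, here is a minimal Python sketch of the usual Brown-Conrady form (two radial and two tangential coefficients). It is not taken from Juno28g, which is written in Mathematica; the function names, the term ordering of P1/P2 (which follows a common computer-vision convention), and the coefficient values in the usage line are assumptions for illustration only.

```python
def _tangential(x, y, r2, p1, p2):
    """Tangential (decentering) offsets of the Brown-Conrady model."""
    dx = 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    dy = p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return dx, dy


def brown_distort(x, y, k1, k2, p1=0.0, p2=0.0):
    """Map ideal normalized image coordinates to distorted ones."""
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    dx, dy = _tangential(x, y, r2, p1, p2)
    return x * radial + dx, y * radial + dy


def brown_undistort(xd, yd, k1, k2, p1=0.0, p2=0.0, iterations=20):
    """Invert brown_distort by fixed-point iteration.

    The model has no closed-form inverse; for small coefficients the
    iteration converges quickly when started from the distorted point.
    """
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        radial = 1.0 + k1 * r2 + k2 * r2 * r2
        dx, dy = _tangential(x, y, r2, p1, p2)
        x = (xd - dx) / radial
        y = (yd - dy) / radial
    return x, y


# Hypothetical coefficients, NOT JunoCam values: round-trip one point.
xd, yd = brown_distort(0.3, 0.2, k1=-0.06, k2=-0.02)
x, y = brown_undistort(xd, yd, k1=-0.06, k2=-0.02)
```

Coordinates here are normalized (pixel offsets from the optical center divided by the focal length in pixels), so a real pipeline would convert to and from pixel coordinates around these calls.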

Mar 3 2018, 02:11 AM

Post #78

Member    Group: Members Posts: 923 Joined: 10-November 15 Member No.: 7837

Thanks for sharing this Brian...very keen to wrap my head around Mathematica in order to get my mitts on some equirectangular projection!

Mar 3 2018, 05:21 PM

Post #79

Member    Group: Members Posts: 427 Joined: 18-September 17 Member No.: 8250

> Thanks for sharing this Brian...very keen to wrap my head around Mathematica in order to get my mitts on some equirectangular projection!

Your results are looking great. If seams or limb steps are noticeable in a high-altitude image, try changing rExtrinsic to 0.0 or -0.002. This may not help with seams in lower-altitude images, since the pipeline doesn't compensate for parallax.

Mar 3 2018, 10:00 PM

Post #80

Member    Group: Members Posts: 923 Joined: 10-November 15 Member No.: 7837

Ah thankee! I've fed 214 raw files into the script so it should be finished in about 20 hours.

Do the seams refer to the fringing on high-contrast features? I've taken to aligning/puppet-warping these by hand for selected files.

Mar 3 2018, 10:43 PM

Post #81

Member    Group: Members Posts: 427 Joined: 18-September 17 Member No.: 8250

> Do the seams refer to the fringing on high contrast features? I've taken to aligning/puppet-warping these by hand for selected files.

Seams would be sharp discontinuities along vertical lines, possibly starting at a misalignment along the terminator. The fringing may be due to misalignment caused by rExtrinsic being off, or possibly to parallax shifts between framelets, the latter of which I've only recently started thinking about. While I haven't tried this yet, if the problem is due to parallax, you could try changing spiceYawRate, which might improve alignment in some areas and make it worse in others, and then merge the good regions. A 1/27 degree change is about one pixel.
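As a rough aid for the "1/27 degree is about one pixel" rule of thumb, the conversion can be sketched in Python as below. The helper names are hypothetical, and the focal length and pixel pitch in the cross-check are assumed nominal JunoCam optics values that do not appear in this thread; the cross-check only confirms the order of magnitude.

```python
import math

# Rule of thumb quoted in the post: about 1/27 degree per pixel.
DEG_PER_PIXEL = 1.0 / 27.0


def pixels_to_degrees(pixels, deg_per_pixel=DEG_PER_PIXEL):
    """Angle change that corresponds to a given pixel shift."""
    return pixels * deg_per_pixel


def degrees_to_pixels(degrees, deg_per_pixel=DEG_PER_PIXEL):
    """Approximate pixel shift produced by a given angle change."""
    return degrees / deg_per_pixel


def deg_per_pixel_from_optics(focal_length_mm, pixel_pitch_mm):
    """Angular size of one pixel at the optical axis (pinhole model)."""
    return math.degrees(math.atan(pixel_pitch_mm / focal_length_mm))


# Assumed nominal optics (not from this thread): ~10.95 mm focal length,
# 7.4 micron pixels. The result is close to, but not exactly, 1/27 degree.
approx = deg_per_pixel_from_optics(10.95, 0.0074)
```

For example, `pixels_to_degrees(3)` gives the angle change to try when a misalignment of about three pixels is visible.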

Mar 4 2018, 07:16 AM

Post #82

Member    Group: Members Posts: 427 Joined: 18-September 17 Member No.: 8250

> Seams would be sharp discontinuities along vertical lines, possibly starting at a misalignment along the terminator.

Meant limb, not terminator. The left image (processed with the default rExtrinsic = 0.00159) shows the limb discontinuity, and seams stretching vertically from the discontinuities. The right image (processed with rExtrinsic = -0.002) reduces the discontinuities, the seams, and also the limb coloring artifacts. (Unfortunately, in this case, reducing the artifact on one side increases it on the other side [not shown].)

Mar 4 2018, 05:07 PM

Post #83

Member    Group: Members Posts: 923 Joined: 10-November 15 Member No.: 7837

Thank you for the explanation.

I have noticed vertical offsets on each of the R, G, B channel strips which I show in a tweet here; R, G, B. I presume this has to do with Juno's orbit and the parallax shifts between framelets you mentioned? I would rather have accuracy in foreground areas since pixel discrepancies fall off with distance, which would be fine for my purpose at least.

The script has been running merrily since last night with nary a hiccup; it is very impressive!

Mar 4 2018, 10:21 PM

Post #84

Member    Group: Members Posts: 427 Joined: 18-September 17 Member No.: 8250

> I have noticed vertical offsets on each of the R, G, B channel strips which I show in a tweet here; R, G, B I presume this has to do with Juno's orbit and the parallax shifts between framelets you mentioned? I would rather have accuracy in foreground areas since pixel discrepancies fall off with distance, which would be fine for my purpose at least.

I'm not sure which image you are working on in the tweet. But I think the fringing here in PJ11_13 is due to the basic nature of my software, which assumes the spacecraft doesn't move significantly in the 0.6 s between the red and blue exposures. However, for PJ11_13 the distance to the surface changes from 8289 to 8072 km while the entire image is being collected. Also, disregard what I said about changing spiceYawRate; it probably won't help with this.

> The script has been running merrily since last night with nary a hiccup, it is very impressive!

Glad it's proved entertaining. I've been running it over the past few nights.

Mar 4 2018, 10:31 PM

Post #85

Member    Group: Members Posts: 923 Joined: 10-November 15 Member No.: 7837

The example image I used was PJ08_118

Mar 5 2018, 02:50 AM

Post #86

Member    Group: Members Posts: 923 Joined: 10-November 15 Member No.: 7837

'Perijoves'

214 images, 11 perijoves, 3.15 billion pixels Gigapan *update: that includes all the black space I added, more like 1.8 billion pixels*

Mar 5 2018, 04:38 AM

Post #87

Member    Group: Members Posts: 427 Joined: 18-September 17 Member No.: 8250

> 'Perijoves' 214 images, 11 perijoves, 3.15 billion pixels Gigapan

Very cool! You've run more perijove data through the pipeline than I have.

Mar 5 2018, 05:17 AM

Post #88

Member    Group: Members Posts: 427 Joined: 18-September 17 Member No.: 8250

> 'Perijoves' 214 images

If you have all the metadata and .mx.gz files, you could try evaluating "Create Movie" (after manually executing Startup, Controlling Parameters, Definitions and Functions, and skipping "Raw to Final Image Processing Pipeline"). I have no idea what it will do when given multiple perijoves.

Mar 5 2018, 09:19 AM

Post #89

Member    Group: Members Posts: 427 Joined: 18-September 17 Member No.: 8250

> I have noticed vertical offsets on each of the R, G, B channel strips which I show in a tweet here; R, G, B

I added a feature intended to help anyone who is interested in shifting color channels around to remove fringes, or in playing with seams:

exportFullFrames: Export each corrected full frame image as a separate PNG file. Load these into Photoshop as layers via "File -> Scripts -> Load Files into Stack...", then select all layers and change the blending mode to "Lighten".

Also, if you are manually removing dark spots, it might be easier (more automatable) to perform the edits in the raw file before running it through the pipeline. (Fixes should repeat every 3*128=384 lines.) Just don't change the raw file name; the pipeline parses out the various fields encoded in the name.
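The 3*128=384 line period can be made concrete with a small Python sketch that maps a raw image line to its framelet and lists the lines where a repeating fix would apply. The helper names are hypothetical and not part of the pipeline, and the blue, green, red framelet order is an assumption about RGB raw files that should be checked against the actual data.

```python
FRAMELET_LINES = 128                       # lines per framelet
CHANNELS = ("blue", "green", "red")        # assumed framelet order in the raw file
SET_LINES = FRAMELET_LINES * len(CHANNELS) # 3 * 128 = 384 lines per R/G/B set


def framelet_location(line):
    """Return (set index, channel name, row within framelet) for a raw line."""
    frame_set, offset = divmod(line, SET_LINES)
    channel_index, row = divmod(offset, FRAMELET_LINES)
    return frame_set, CHANNELS[channel_index], row


def repeating_lines(line, total_lines):
    """All raw lines that share a sensor row with `line` (same channel, same row)."""
    return range(line % SET_LINES, total_lines, SET_LINES)


# A dark spot seen at raw line 394 sits on the same sensor row as lines
# 10, 778, ... so the same edit would be repeated at each of them.
lines_to_fix = list(repeating_lines(394, 1000))
```

The same index arithmetic is what makes a raw-file fix easier to automate than a fix applied after projection, where the spot no longer falls on a regular grid.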

Mar 5 2018, 11:22 PM

Post #90

IMG to PNG GOD     Group: Moderator Posts: 2254 Joined: 19-February 04 From: Near fire and ice Member No.: 38

> True. If you're using the C kernel, any oscillation should be captured in the kernel. If you're not using the C kernel, then all bets are off. I still find it hard to tell in this whole exchange if people are using the C kernel, and if so, how and when. If there are errors in the angular offsets for Junocam in the I kernel and frames kernel, or if there's spacecraft motion not being captured in the C kernel, I'd like to know about those problems so they can be fixed.

There are definitely differences between how we are doing this. My processing differs in some ways from what Gerald or Brian (or someone else) are doing, although the fundamentals should be the same.

Regarding C kernels, I always use orientation data from the C kernel for every R/G/B set of framelets (typically 25-40 sets per image), and I also do this for the spacecraft position. If possible, as a 'sanity check' I would be interested in knowing the typical pointing errors I should be getting if I use START_TIME + 0.068 seconds in combination with 'frame number' times (INTERFRAME_DELAY + 0.001) as the time when extracting the pointing from the C kernel (I sometimes adjust the 0.068 value slightly). I determine the pointing errors using limb fits in images that have been geometrically corrected using the appropriate Brownian distortion parameters. The typical pointing error I get is ~10 pixels, but this varies a bit (it's rarely less than ~3 or bigger than 20). The pointing correction varies a bit from the first framelet set to the last (using a constant correction would increase the artifacts at the limb that have been discussed in the preceding posts, and the color at the limb near the top and/or bottom can get messed up).

I think everything I'm doing works correctly, partially because for images where I know the pointing to be accurate I get the expected result, i.e. no pointing error (example: the Cassini image subset where C-smithed C kernels are available). However, I'm not quite as sure as I'd like to be that everything is correct. The reason is that debugging this thing isn't completely trivial when I don't know exactly which result I should be getting (in particular, I don't know how big the pointing error really is). However, it's probably encouraging that I have *probably* noticed artifacts in images not processed by me that could indicate pointing errors similar to what I've been getting.

The pointing correction can be omitted (and I sometimes omit it). However, doing so can result in obvious color artifacts near the limb, while artifacts far from the limb are much smaller and often not significant unless you want very high geometric accuracy.
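The framelet timing described in this post (START_TIME + 0.068 s, plus the frame number times INTERFRAME_DELAY + 0.001 s) can be written as a short Python sketch. The function and parameter names are hypothetical; the two constants are the values quoted in the post, exposed as arguments because the poster says the 0.068 value is sometimes adjusted. Times are plain seconds here, and the example inputs are invented; converting the label's START_TIME to ephemeris time and querying the C kernel are left out.

```python
def framelet_time(start_time, frame_number, interframe_delay,
                  start_offset=0.068, delay_bias=0.001):
    """Time (seconds) at which to sample pointing for one R/G/B framelet set.

    start_time       -- START_TIME from the image label, already in seconds
    frame_number     -- zero-based index of the framelet set
    interframe_delay -- INTERFRAME_DELAY from the image label, in seconds
    start_offset     -- fixed offset added to START_TIME (0.068 s in the post)
    delay_bias       -- small bias added to INTERFRAME_DELAY (0.001 s in the post)
    """
    return start_time + start_offset + frame_number * (interframe_delay + delay_bias)


def framelet_times(start_time, frame_count, interframe_delay, **kwargs):
    """Sampling times for every framelet set in an image."""
    return [framelet_time(start_time, n, interframe_delay, **kwargs)
            for n in range(frame_count)]


# Invented example values: 30 framelet sets, 0.371 s interframe delay.
times = framelet_times(start_time=0.0, frame_count=30, interframe_delay=0.371)
```

Each returned time would then be used to look up spacecraft orientation and position separately for that framelet set, which is what lets the pointing correction vary from the first set to the last.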
